Cycloid on AI in platform engineering: acceleration, or technical debt on fast forward?

This is a guest post for the Computer Weekly Developer Network written by Benjamin Brial, founder, Cycloid.

Brial writes in full as follows…

As AI continues to infiltrate every corner of the enterprise, the pressure is on platform engineering teams to figure out if, and where, it belongs.

Many are now asking: can AI build Internal Developer Platforms (IDPs)? Can it operate them? Should it?

At first glance, the proposition is appealing. Automating repetitive tasks, accelerating code generation, simplifying decision-making. Who wouldn’t want that? But the reality is more complicated, especially when you move from AI demos to enterprise-scale use cases.

As someone who’s spent over a decade working with DevOps and platform teams and leading a company focused entirely on making platform engineering scalable and sustainable, I’d argue we need to be far more measured about the role AI plays in our platforms.

In short: AI is not the future of platform engineering. At least, not yet.

The illusion of transformation

One of the biggest misconceptions I see is the assumption that AI will revolutionise the way we create and manage IDPs. The truth is, most of the problems AI is being pitched to solve have already been tackled (in many cases, more effectively) using existing tools and human processes.

Take infrastructure code generation.

Tools like TerraCognita have been automatically generating Terraform from cloud resources for years. It’s been part of our toolkit at Cycloid since long before the term ‘GenAI’ entered the tech lexicon. Does AI offer a more conversational interface for this task? Maybe. But is it fundamentally better? Not really.

The challenge in platform engineering isn’t generating code snippets; it’s in designing scalable and maintainable systems. It’s in dealing with edge cases, dependencies, policies, user experience and organisational complexity. These are not areas where AI shines today. In fact, AI often introduces new risks in these domains because, right now, it simply can’t understand the complexity of the human relationships that affect every organisation’s decision-making.

AI-generated platforms don’t scale (yet)

AI can speed up prototyping. We’ve all seen it generate a quick MVP or a CI/CD pipeline with a few prompts. But once that code enters production pipelines, it needs to be battle-tested, audited, secured and maintained, often by humans who didn’t generate it.

In platform engineering, the devil is in the details.

A poorly configured IAM policy, an over-permissive Kubernetes manifest, or a non-standard logging convention can snowball into very serious incidents. And if the AI-generated infrastructure doesn’t follow your team’s internal standards, you’re left rewriting and refactoring just to get it into shape.

That’s not acceleration, it’s technical debt on fast-forward.
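To make the IAM example concrete, here is a minimal sketch of the kind of automated check a platform team might run on generated infrastructure before a human reviews it. It assumes policies follow the standard AWS IAM JSON layout (`Statement`, `Effect`, `Action`, `Resource`); the function name `find_wildcard_statements` and the sample policy are hypothetical, and a real review would cover far more than wildcards.

```python
def find_wildcard_statements(policy: dict) -> list[dict]:
    """Return the Allow statements that grant wildcard actions or resources.

    Hypothetical helper for illustration only; it is not part of any
    Cycloid or AWS tooling.
    """
    statements = policy.get("Statement", [])
    # IAM allows a single statement object instead of a list.
    if isinstance(statements, dict):
        statements = [statements]

    flagged = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        actions = stmt.get("Action", [])
        resources = stmt.get("Resource", [])
        # Both fields may be a single string or a list of strings.
        if isinstance(actions, str):
            actions = [actions]
        if isinstance(resources, str):
            resources = [resources]

        # Flag "*" and service-wide wildcards such as "s3:*".
        broad_action = any(a == "*" or a.endswith(":*") for a in actions)
        broad_resource = "*" in resources
        if broad_action or broad_resource:
            flagged.append(stmt)
    return flagged


# Sample policy (made up for this sketch): one over-permissive statement,
# one narrowly scoped statement.
generated_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject"],
            "Resource": "arn:aws:s3:::example-bucket/*",
        },
    ],
}

risky = find_wildcard_statements(generated_policy)
```

A check like this catches only the most obvious problems. Whether a flagged statement is acceptable still depends on the team's internal standards, which is exactly the context a generated policy lacks.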

Human context > statistical predictions

The strength of a good platform engineer isn’t just their ability to write YAML. It’s in making smart, context-aware decisions based on the unique needs of the organisation, the maturity of its teams and the realities of its infrastructure.

That level of judgment doesn’t come from a large language model (LLM). It comes from experience.

If we start treating AI-generated outputs as authoritative, we risk deskilling our teams and losing the human intuition that makes platform engineering so valuable in the first place.

The environmental cost no one talks about

There’s another aspect that’s often overlooked: the energy cost. Every interaction with a GenAI tool involves running large-scale compute operations, often on carbon-intensive infrastructure. Multiply that across hundreds of developers using AI-enhanced tooling daily and the footprint becomes significant.

For an industry already grappling with tool sprawl and sustainability challenges, introducing another high-energy, cloud-dependent layer is not a decision to take lightly.

Platform engineering should be about simplifying complexity, reducing waste and making operations more sustainable. Adding carbon-hungry LLMs to every workflow is not aligned with those goals.

Where AI can help

This is not to say AI has no place. There are real use cases where it can provide genuine value in the context of platform engineering, provided we approach them with the right expectations.

For example, documentation and onboarding are areas where AI can genuinely streamline workflows. Platform teams often struggle with the time-consuming task of keeping documentation up to date or making complex internal architectures intelligible to new team members. Here, GenAI can help summarise system behaviour, auto-generate documentation from code or config files and reduce the learning curve for developers entering unfamiliar environments.

AI is also useful in navigating complex workflows, particularly within sprawling toolchains or layered IDPs. For developers unfamiliar with the nuances of internal tooling, AI-powered assistants can act as a helpful first point of contact, surfacing relevant scripts, best practices, or platform capabilities in natural language, without requiring them to dig through endless wiki pages or Slack threads.

Another benefit lies in the creation of reusable stack components. AI can help accelerate the design of standardised platform modules by suggesting boilerplate configurations, integrating predefined security policies, or generating templated pipelines. If used carefully and with a layer of human review, this reduces repetitive work and promotes more consistent standards across teams.

These use cases are worth exploring. Right now, AI can support the human experts who build and maintain IDPs, not replace them.

Sustainable, human-centric platforms

Platform engineering is not a buzzword. It’s a long-term capability grounded in reliability, governance and good abstraction. If we rush to inject AI into every part of the stack without careful evaluation, we’ll dilute that foundation and end up with platforms that are harder to understand, maintain and trust.

So, what is the future? I’d argue it’s not prompt engineering; it is platform engineering built around clarity, reusability and human empathy. It’s understanding what devs and operations teams really need and giving it to them in a form they can own and evolve.

AI can support that mission. But it won’t lead it.

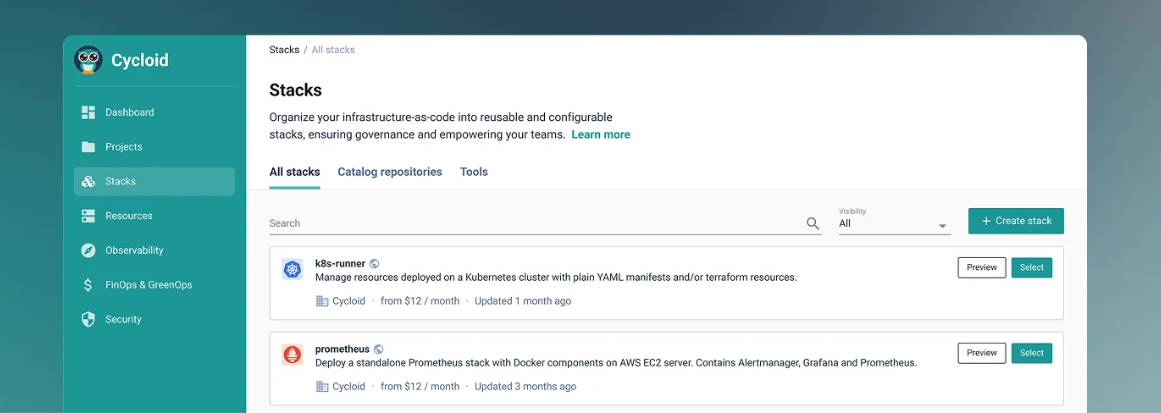

About the Author: Benjamin Brial founded Cycloid.io in 2015. Cycloid is a platform engineering solution with a GitOps, self-service-first approach that lets organisations build sustainable, scalable Internal Developer Platforms. With a background at eNovance and Red Hat, Benjamin champions trust, transparency and upskilling in both Cycloid’s company culture and the platform’s role in improving DevX and operational efficiency to accelerate software delivery, hybrid cloud and DevOps at scale.