AI Workflows - Zencoder: Why it’s time to ‘harness’ AI agent orchestration (part 2)

This is a guest post for the Computer Weekly Developer Network written by Andrew Filev, CEO and founder of Zencoder. It follows part 1 of this analysis.

Zencoder is known for its AI-powered coding agent that helps developers with code suggestions, unit testing, code repair and code generation. The platform’s core strengths are said to be its “deep codebase understanding” and integrations with popular development tools.

Filev writes in full as follows…

In my practice, with the right test suite, best-in-class models (like Opus or GPT5-Pro) and a best-in-class harness [Filev is too modest to mention Zencoder, but the technology would fit in this space -Ed], a skilled engineer can run an AI-first engineering process and improve their productivity 2x, which is fantastic, but not yet the 10x promise.

So how do we unlock the next level of productivity? There are two interconnected levers – autonomy and parallelisation – and they both connect to one topic: orchestration.

Manual overdrive

When you have to manually supervise the process A to Z, it’s not an enjoyable experience. First, you wait for the models, as the best ones take their sweet time to give you the artefact you are looking for. Then they wait for you to read the artefact and approve or correct it. It’s the world’s slowest game of ping-pong. If you can have agents reliably do parts of your conductor job, you can have bigger breaks between the times your input is needed, freeing you up to do something else.

For example, if the agent orchestrates sub-agents to execute steps of a well-defined plan, you don’t have to do it manually. Or, in the planning phase, instead of sequentially improving the plan with AI yourself, you could have two AI agents generate slightly different plans. A third AI agent then critiques both plans, evaluates their feasibility and merges them. This results in the first artefact being of higher quality, requiring fewer iterations and less of your time.
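The two-planners-plus-critic idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `planner_a`, `planner_b` and `critic_merge` are hypothetical stand-ins for real model calls, and the "critique" here is a toy rule (keep each distinct step once) rather than a genuine feasibility review.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

# A planner is any callable that turns a task description into ordered steps.
Planner = Callable[[str], List[str]]


def planner_a(task: str) -> List[str]:
    # Hypothetical stand-in for a call to a planning agent.
    return [f"write spec for {task}", f"implement {task}", f"write tests for {task}"]


def planner_b(task: str) -> List[str]:
    # A second hypothetical planner that proposes a slightly different plan.
    return [
        f"write spec for {task}",
        f"write tests for {task}",
        f"implement {task}",
        f"update docs for {task}",
    ]


def critic_merge(plans: List[List[str]]) -> List[str]:
    # Toy critic: keep every distinct step once, in first-seen order.
    merged: List[str] = []
    for plan in plans:
        for step in plan:
            if step not in merged:
                merged.append(step)
    return merged


def draft_plan(task: str, planners: List[Planner]) -> List[str]:
    # Run the planners concurrently, then hand all plans to the critic.
    with ThreadPoolExecutor(max_workers=len(planners)) as pool:
        plans = list(pool.map(lambda planner: planner(task), planners))
    return critic_merge(plans)
```

Calling `draft_plan("login", [planner_a, planner_b])` returns one merged plan, so the human reviews a single artefact instead of iterating on each draft.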

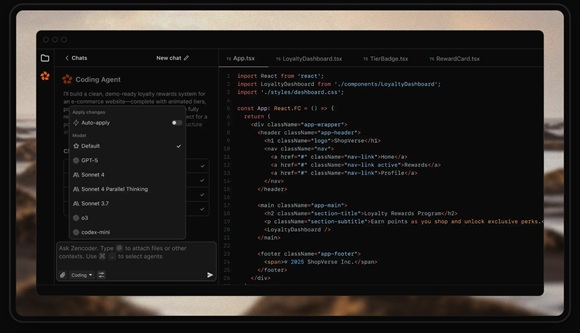

This is an emerging best practice and people are pushing the limits of AI autonomy here. Currently, they use a command line interface and scripting for those automations, but a GUI is coming soon, unlocking these best practices for more people.

AI autonomy

As agents become more autonomous and require less supervision, you have more opportunities to run several of them in parallel, which will bring the next level of productivity.
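As a rough sketch of what running agents in parallel can look like in a script, the snippet below fans tasks out with `asyncio` and caps how many run at once. The `run_agent` coroutine is a hypothetical placeholder that only simulates work; a real harness would call its own agent API there.

```python
import asyncio
from typing import List


async def run_agent(task: str) -> str:
    # Hypothetical agent call; the sleep simulates waiting on a model.
    await asyncio.sleep(0.01)
    return f"done: {task}"


async def run_in_parallel(tasks: List[str], max_concurrent: int = 3) -> List[str]:
    # The semaphore caps how many agents are in flight at the same time.
    limit = asyncio.Semaphore(max_concurrent)

    async def guarded(task: str) -> str:
        async with limit:
            return await run_agent(task)

    # gather returns results in the same order as the submitted tasks.
    return await asyncio.gather(*(guarded(task) for task in tasks))
```

A call such as `asyncio.run(run_in_parallel(["fix bug", "add tests", "update docs"]))` returns when all three simulated agents have finished.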

Not only that, but when you have tuned your agent to the point where you trust it to do specific jobs at a certain level of quality, you can push those agents into trigger-based CI processes. For example, in our own company, we use Zen for CI to perform tasks such as automated AI code review and auto-resolution for JIRA tickets tagged with the agent. This is a different face of orchestration.
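To make the trigger-based idea concrete, here is a minimal sketch of mapping incoming events to agent jobs. It is not how Zen for CI is implemented; the event kinds, the `ai-agent` label and the job names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List

AGENT_TAG = "ai-agent"  # hypothetical label that marks a ticket for the agent


@dataclass
class Event:
    kind: str  # hypothetical kinds: "pull_request_opened", "ticket_updated"
    ref: str   # identifier of the pull request or ticket
    labels: List[str] = field(default_factory=list)


def jobs_for(event: Event) -> List[str]:
    # Every new pull request gets an automated AI code review.
    if event.kind == "pull_request_opened":
        return [f"ai_code_review:{event.ref}"]
    # Tickets are auto-resolved only when someone has tagged them for the agent.
    if event.kind == "ticket_updated" and AGENT_TAG in event.labels:
        return [f"auto_resolve:{event.ref}"]
    # Anything else is left to humans.
    return []
```

The point of the design is that a person opts a ticket in by tagging it, so the agent only acts on work it is trusted to handle.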

The control tower vision

We’re moving toward “agent control towers”, i.e. platforms that intelligently route work between specialised agents, manage their interactions and provide the reliability and observability that enterprises need for mission-critical development workflows.
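A toy version of that routing-plus-observability idea might look like the sketch below. The `ControlTower` class and its methods are hypothetical names invented for this example, and the "observability" is just an in-memory log of which agent handled what.

```python
from typing import Callable, Dict, List, Tuple

# An agent is any callable that takes a payload and returns a result.
Agent = Callable[[str], str]


class ControlTower:
    def __init__(self, fallback: Agent) -> None:
        self._agents: Dict[str, Agent] = {}
        self._fallback = fallback
        # Each entry records (task kind, agent name, status).
        self.log: List[Tuple[str, str, str]] = []

    def register(self, kind: str, agent: Agent) -> None:
        # Associate a task kind with the specialised agent that handles it.
        self._agents[kind] = agent

    def dispatch(self, kind: str, payload: str) -> str:
        # Route to the specialised agent, or to the fallback if none is registered.
        agent = self._agents.get(kind, self._fallback)
        name = getattr(agent, "__name__", "agent")
        try:
            result = agent(payload)
        except Exception:
            # Record the failure, then let the caller decide what to do.
            self.log.append((kind, name, "failed"))
            raise
        self.log.append((kind, name, "ok"))
        return result
```

After a few `dispatch` calls, `tower.log` shows which agent handled each task and whether it succeeded, which is the smallest useful form of the audit trail an enterprise would expect.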

I believe it’s a path, rather than a jump. If someone launched a product that is 100% AI-first orchestration, nobody would understand it. People need to first understand and trust individual agents before they can conceptualise orchestrating multiple agents. The technology must start with existing habits and then rapidly co-evolve. This goes beyond building better software; we’re changing how we think and how we work. It’s a shift that demands more from us cognitively, even as it multiplies our output.

For example, writing specs comes easier to me than to some of my engineers. They’ve been writing code for the last decade and it feels second nature to them. The metaphor I use for that is rowing (a boat) faster, versus building a motorboat. When AI coding agents came to life, my engineers started rowing faster. I wasn’t that much of a rower (coder) over the last decade, so I instead was building my motorboat (experimenting with AI-first processes).

All aboard the AI motorboat

Andrew Filev, CEO & founder of Zencoder.

For a while, the engineers were way ahead of me in that race, but when my motorboat became seaworthy, they couldn’t row at that speed. Specs are slower than coding in the first hour, but they become faster by the first day and dramatically safer by week two – preventing the accumulation of review debt that haunts rushed implementations. Not only that, but every month the models and agents are becoming stronger, widening that gap.

My engineers had to stop rowing and change the way they work. Deep down, coding or spec-ing is about the same – we are builders and creators and language (be it Python or English) is just a tool. But it takes a push to change an ingrained habit. And I should note – this spec-writing isn’t necessarily easier work. When the agent handles the routine implementation, you’re left with the mental marathon of anticipating edge cases and consequences. It can feel harder, not easier, as you carry more architectural responsibility upfront.

One other adoption barrier is cost, which is a chicken-and-egg problem. As I’m using the best models, my average day of AI-first engineering runs to $50+ in API costs (sometimes spiking higher if I’m working with a repository that I’m not familiar with and that doesn’t yet have strong AI-first documentation). Most engineers and engineering leaders are accustomed to the benefits and costs of “faster rowing”, paying between $1 and $10 for a day of work (e.g., $20 – $200/month). This handicaps their agents and keeps these teams from adopting AI-first processes, which in turn prevents them from seeing significant productivity improvements and spending more.
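The gap between those budgets is easier to see once everything is on a monthly basis. The sketch below assumes 20 working days per month, which is what the $20/month to $1/day mapping above implies; the function name is made up for the example.

```python
WORKING_DAYS_PER_MONTH = 20  # assumption implied by $20/month being about $1/day


def monthly_cost(daily_cost: float) -> float:
    # Convert a per-working-day spend into a per-month spend.
    return daily_cost * WORKING_DAYS_PER_MONTH


typical_low = monthly_cost(1)    # lower end of the usual budget
typical_high = monthly_cost(10)  # upper end of the usual budget
ai_first = monthly_cost(50)      # the $50 a day floor for AI-first work
```

Under that assumption, $50 a day works out to $1,000 a month, five times the top of the usual $20 to $200 range.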

What’s interesting is that if I put you on three different models – a strong baseline, a 2x more expensive one and a 10x more expensive one – you will likely not see an immediate difference. But if you work in an AI-first way with the 10x one and I take it away at the end of the week, you will never want to go back. Just like with the changes in habits, the budgetary allowance will likely be a gradual but rapid evolution.

Most people logically understand that when AI can do the work of a senior engineer 24/7 in an almost autonomous way, $2,000 would be a bargain price for that. But they have to take the first step and go from $20 to $200; from there, the price will go higher.

What’s coming next

We’re moving from AI-assisted coding to AI-first engineering. This is still early days. We’re all figuring out the patterns, failure modes and best practices… and working on large production repositories still requires strong engineering judgment – AI amplifies expertise rather than replacing it.

Engineers who first master those skills and managers who first implement those processes are in demand on the job market. Companies that master orchestration gain massive advantages, threatening their competitors – and the orchestration platforms that succeed are learning from real-world deployment challenges and moving in lockstep with their customers.