AI Workflows - Zencoder: Why it’s time to ‘harness’ AI agent orchestration (part 1)

This is a guest post for the Computer Weekly Developer Network written by Andrew Filev, CEO and founder of Zencoder.

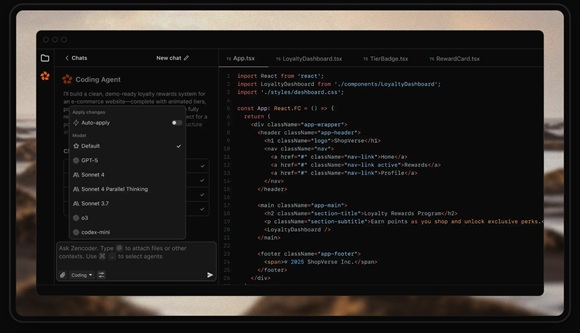

Zencoder is known for its AI-powered coding agent that helps developers with code suggestions, unit testing, code repair and code generation. The platform’s core strengths are said to be its “deep codebase understanding” and integrations with popular development tools.

Filev writes in full in part 1 of an extended analysis as follows…

We’ve all been there – obsessing over making our AI agents smarter, faster, more capable. But after months in the trenches with multi-agent workflows, I’ve realised that the target has moved. The bottleneck isn’t your code-generating agent being 80% instead of 70% accurate. It’s something far more fundamental.

An agent is a capable LLM, given proper tools, initialised with a good system prompt and told what to do and how to do it. Under the hood, it’s all lumped together when passed to the LLM, but in practice, thinking about these elements separately is crucial for workflow design.

What is an agentic harness?

When working with capable agents, vendors usually handle the system prompt, tools and context management through what’s called an “agentic harness” which can make or break agent performance. Our team dedicates a significant amount of resources to optimising this harness for enterprise-scale contexts.

Modern harnesses enable customisation of both instructions and tool sets. For example, our testing agent includes additional tools not available to our coding agent. If you are wondering whether giving all the agents all the possible tools is a good idea, it is not: unused tools in large quantities will “confuse” the agent. A typical agent will perform well with about a dozen tools, while the platform might have 100+ available tools. When your plumber comes on-site, he typically doesn’t carry the same tool set as your electrician.
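As a rough illustration of scoping tools per agent, here is a minimal Python sketch. Everything in it is hypothetical: the `TOOL_REGISTRY` contents, the `AgentSpec` class and the cap of 12 tools are assumptions chosen to mirror the "about a dozen" figure above, not Zencoder's actual harness.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical platform-wide registry; a real platform might expose 100+ tools.
TOOL_REGISTRY: Dict[str, Callable[..., str]] = {
    "read_file": lambda path: f"<contents of {path}>",
    "edit_file": lambda path, patch: f"patched {path}",
    "search_code": lambda query: f"matches for {query}",
    "run_tests": lambda target: f"ran tests in {target}",
    "measure_coverage": lambda target: f"coverage for {target}",
}

# Assumed cap, echoing "about a dozen tools"; tune for your own setup.
MAX_TOOLS_PER_AGENT = 12


@dataclass
class AgentSpec:
    """One specialised agent: its instructions plus the tools it may see."""
    name: str
    system_prompt: str
    tool_names: List[str] = field(default_factory=list)

    def tools(self) -> Dict[str, Callable[..., str]]:
        """Return only the tools this agent is allowed to use."""
        unknown = [t for t in self.tool_names if t not in TOOL_REGISTRY]
        if unknown:
            raise KeyError(f"unknown tools for {self.name}: {unknown}")
        if len(self.tool_names) > MAX_TOOLS_PER_AGENT:
            raise ValueError(f"{self.name} has too many tools")
        return {t: TOOL_REGISTRY[t] for t in self.tool_names}


# The testing agent gets extra tools that the coding agent never sees.
coding_agent = AgentSpec(
    "coding", "You write code.", ["read_file", "edit_file", "search_code"]
)
testing_agent = AgentSpec(
    "testing",
    "You write and run tests.",
    ["read_file", "edit_file", "search_code", "run_tests", "measure_coverage"],
)
```

The design choice is an explicit allowlist per agent rather than a denylist, so adding a new tool to the platform does not silently widen every agent's tool set.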

Tightening the harness

More importantly, LLMs are extremely sensitive to context. While 200K tokens sounds generous, you quickly fill it when editing large files, since the LLM trajectory (your “conversation history”) carries not just what you see on screen, but all tool call details. While you have your long-term memory and your short-term memory, all the model has is its training and that trajectory. When the context overflows, your harness compacts/summarises it, potentially losing crucial details or causing the LLM to misbehave.
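To make the overflow-and-compaction behaviour concrete, here is a small Python sketch under stated assumptions. The `Turn` class, the `compact` function and the four-characters-per-token estimate are illustrative stand-ins; a real harness would use the model's tokenizer and would typically ask an LLM to write the summary.

```python
from dataclasses import dataclass
from typing import List

CONTEXT_BUDGET = 200_000  # tokens, the figure quoted in the text


def estimate_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: assume ~4 characters per token."""
    return max(1, len(text) // 4)


@dataclass
class Turn:
    role: str     # "user", "assistant" or "tool"
    content: str  # tool turns carry full call details, not just what you see


def trajectory_tokens(trajectory: List[Turn]) -> int:
    return sum(estimate_tokens(t.content) for t in trajectory)


def compact(trajectory: List[Turn], budget: int = CONTEXT_BUDGET,
            keep_recent: int = 4) -> List[Turn]:
    """Collapse older turns into one summary turn once the budget is exceeded.

    The summary here is plain truncation, which makes the information loss
    visible. The result is not guaranteed to fit the budget; it only shows
    the mechanism.
    """
    if trajectory_tokens(trajectory) <= budget:
        return trajectory
    split = max(0, len(trajectory) - keep_recent)
    old, recent = trajectory[:split], trajectory[split:]
    if not old:
        return trajectory
    summary = "; ".join(f"{t.role}: {t.content[:40]}" for t in old)
    header = f"[summary of {len(old)} earlier turns] "
    return [Turn("assistant", header + summary)] + recent
```

Note how each older turn is cut to 40 characters: anything past that point, such as the tail of a large file edit, is exactly the kind of "crucial detail" that compaction can drop.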

More critical than context length is its format and relevance. LLMs’ attention mechanism makes them easily “distracted” by irrelevant information. For example, if you repeatedly mention the same examples, it becomes harder for the model to deviate from them and come up with something original.

What are atomic tasks?

So altogether, managing context and instructions is essential for working with agents effectively.

This is why specialised agents working on atomic tasks (discrete units of work) offer a better conceptual model than one uber-agent. Their signal-to-noise ratio is high – they get precise instructions and “goldilocks” context – not too much, not too little. This specialised agent approach naturally leads to the next challenge: how do we coordinate these focused performers?

As people have started experimenting with coding agents, the more successful AI-first practitioners have quickly figured out a helpful best practice. They use one agent to do the planning and then use different agents to execute the plan, typically in a fresh “agentic run” for each task. This helps each agent to get that “goldilocks” context.

Andrew Filev, CEO & founder of Zencoder.

They know just enough about previous and next steps to ground their thinking, but not so much as to overflow the context or distract them from the job at hand. So to some degree, a developer is the director of an AI orchestra: the plan we discussed above is the sheet of music and each of those agentic runs is a musician that helps you play a coherent melody.

Those “musicians” need precise information presented in a particular way. Your code review agent needs the full diff and coding standards, while your testing agent wants requirements and edge cases.
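The plan-then-execute pattern, with each run seeing only its own task plus a glimpse of its neighbours, could be sketched as follows. This is a hypothetical illustration: `Step`, `ROLE_INPUTS` and `build_context` are invented names, and the role-to-input mapping simply encodes the two examples given above (review gets the diff and coding standards, testing gets requirements and edge cases).

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Step:
    """One atomic task in the plan produced by the planning agent."""
    title: str
    role: str          # e.g. "coding", "review", "testing"
    instructions: str


# Assumed mapping from agent role to the artefacts that role needs.
ROLE_INPUTS: Dict[str, List[str]] = {
    "review": ["diff", "coding_standards"],
    "testing": ["requirements", "edge_cases"],
}


def build_context(plan: List[Step], index: int,
                  artefacts: Dict[str, str]) -> str:
    """Assemble the prompt context for a fresh agentic run of one step.

    The run sees its own instructions, only the titles of the previous and
    next steps, and only the artefacts relevant to its role.
    """
    step = plan[index]
    prev_title = plan[index - 1].title if index > 0 else "(none)"
    next_title = plan[index + 1].title if index + 1 < len(plan) else "(none)"
    sections = [
        f"Task: {step.title}",
        f"Instructions: {step.instructions}",
        f"Previous step: {prev_title}",
        f"Next step: {next_title}",
    ]
    for key in ROLE_INPUTS.get(step.role, []):
        sections.append(f"{key}:\n{artefacts.get(key, '(not provided)')}")
    return "\n".join(sections)
```

Calling `build_context` once per step, and starting a new run with only that string, is one way to keep neighbouring tasks visible without dragging the whole trajectory along.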

Verify, verify, verify… then verify

In real life, working on production code, you need verification at every step. When an AI agent confidently tells you that everything looks great and you blindly trust it, you get the pleasure of a debugging session that lasts until 3 am. This is precisely why many developers remain sceptical about AI-generated code. This is where another best practice comes into play: test-driven development (or TDD), in which not only do tests cover everything, but the tests are also written before the code.

There was an attempt at popularising it in the early 2000s; many engineers tried it and (almost as many) ultimately went back to the old way of writing (some) tests after they write the code. AI agents are tireless and, with the right prompting, are ready to follow the programme, so more and more AI-first practitioners are finding their answer to the verification challenge in TDD.

If every step of the plan starts with writing acceptance tests and every AI-coding session ends with making all of those tests pass and a complete system regression, that helps to reduce the number of debugging sessions and improve the test coverage. So we have our musicians and our sheet music – next (in part 2) let’s talk about how to conduct this orchestra at scale.
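The tests-first loop described above can be sketched in a few lines of Python. This is a minimal illustration with assumed names: `run_step`, its callable parameters and the retry limit are hypothetical, and in practice the test lists would be produced and run by a real test runner rather than plain functions.

```python
from typing import Callable, List

Check = Callable[[], bool]  # a test that returns True when it passes


def run_step(write_acceptance_tests: Callable[[], List[Check]],
             implement: Callable[[], None],
             regression_suite: List[Check],
             max_attempts: int = 3) -> bool:
    """Run one plan step in a test-first fashion.

    1. The acceptance tests are written before any code changes.
    2. The agent implements (or repairs) the code.
    3. The step succeeds only when the acceptance tests and the full
       regression suite both pass; otherwise the agent tries again.
    Returns False if the attempts run out, so a human can step in.
    """
    acceptance = write_acceptance_tests()
    for _ in range(max_attempts):
        implement()
        acceptance_ok = all(test() for test in acceptance)
        if acceptance_ok and all(test() for test in regression_suite):
            return True
    return False
```

Returning `False` instead of looping forever is a deliberate choice in this sketch: it gives the developer a clear point at which to intervene rather than trusting an agent's claim that everything looks great.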

Read part 2 of this analysis here…