Software syncopation: Rhythm & news in NTT's next LLM ‘tsuzumi 2’

As the march (let’s not say race) towards building, implementing and interconnecting intelligence functions into enterprise software stacks deepens, the palpable (but largely friendly) division between open and closed proprietary language models becomes more clearly defined.

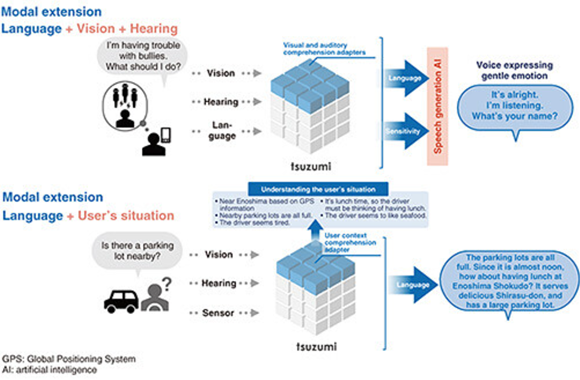

With language models now joined by large (and small) image and video models, we also now extend ourselves to so-called Large Action Models (LAMs) i.e. AI models that are built to understand human intentions and then translate those intentions into actions within a given environment or system.

Given this backdrop, we can then look to see where models are being built and created.

Of course, we know that the open model landscape is populated by tools such as Meta’s Llama 3, Mistral AI’s models, Google’s Gemma, Alibaba’s Qwen and Microsoft’s Phi-4.

Logically then, large corporations the size of NTT have taken it upon themselves to develop their own LLMs. NTT’s is a more closed, proprietary offering designed for applications where sensitive data flows occur and where there is a need for more defined control than any open model offering provides.

NTT tsuzumi 2

NTT has detailed tsuzumi 2, its lightweight, high-performance LLM for Japanese-language processing. First introduced in 2023, tsuzumi is now in its second-generation iteration.

Named after a traditional Japanese drum, the new version of tsuzumi is aimed at resolving issues such as increased electricity consumption and costs associated with the widespread adoption of LLMs.

Why be a closed model?

NTT says it is actively promoting the proposal and implementation of AI solutions that are optimally tailored to public environments, closed environments and customers’ business models and data requirements. To enhance its AI proposal capabilities and expand its AI portfolio, NTT has added tsuzumi 2 to its lineup.

“In recent years, while attention to LLMs such as ChatGPT has surged, traditional LLMs – which require massive training data and computational resources – face challenges including increased electricity consumption, rising operational costs and security risks associated with handling confidential information. In actual business environments, we have received numerous requests from corporations and local governments to enhance the ability to understand complex documents they own and to strengthen the capacity to respond to specialized knowledge,” explained NTT, in a technical statement.

The company says that by feeding these needs back into R&D, NTT has developed the next-generation model of tsuzumi, called tsuzumi 2.

Inference on a single GPU

In operation, tsuzumi 2 inherits the existing capability of being able to perform “inference on a single GPU”, thereby keeping environmental impact and costs low.
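To make the single-GPU point a little more concrete, here is a minimal back-of-the-envelope sketch of how one might check whether a model’s weights fit in one GPU’s memory. The parameter counts, precision and memory size in the usage lines are hypothetical illustrations, not figures published by NTT, and the 20% overhead allowance (for activations and cache) is an assumption rather than a measured value.

```python
def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed just to hold the model weights, in GiB."""
    return num_params * bytes_per_param / (1024 ** 3)


def fits_on_gpu(
    num_params: float,
    bytes_per_param: float,
    gpu_memory_gib: float,
    overhead_fraction: float = 0.2,  # assumed allowance, not a measured figure
) -> bool:
    """Return True if weights plus a rough overhead allowance fit in GPU memory."""
    needed = weight_memory_gib(num_params, bytes_per_param) * (1 + overhead_fraction)
    return needed <= gpu_memory_gib


# Hypothetical examples: 16-bit weights (2 bytes each) on a 24 GiB GPU.
small_fits = fits_on_gpu(7e9, 2, 24)    # a 7-billion-parameter model
large_fits = fits_on_gpu(70e9, 2, 24)   # a 70-billion-parameter model
```

Under these assumed numbers, the smaller model fits on one card and the larger one does not, which is the kind of trade-off a lightweight model is designed to avoid.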

In terms of Japanese performance, tsuzumi 2 achieves world-top results among models of comparable size, notably in the business domain, where the basic abilities of knowledge, analysis, instruction-following and safety are prioritised. The technology here does support other languages (English, Korean, French, German and others are mentioned), but perhaps unsurprisingly, its core base of extended functionality is seen in the Japanese language.

Specialised model development

A ‘tsuzumi’ drum.

NTT further states that retrieval-augmented generation (RAG) and “fine-tuning” have improved the efficiency of developing domain-specific models for companies and industries. In addition, tsuzumi 2 has reinforced knowledge in the financial, medical and public sectors, areas where customer demand was high.

As a result, it delivers strong performance in those domains and, according to NTT, excels in the accuracy gains achieved through specialised fine-tuning.
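For readers less familiar with RAG, the general idea can be sketched in a few lines: find the stored documents most relevant to a question, then hand them to the model as context. The snippet below is a toy illustration only; it uses simple word-count similarity on English text, and the function names (`retrieve`, `build_prompt`) are hypothetical and are not part of any NTT or tsuzumi API. A production system would typically use learned embeddings and a tokenizer suited to Japanese.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into word tokens."""
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, documents: list[str], k: int = 1) -> str:
    """Assemble a prompt that places retrieved context ahead of the question."""
    context = "\n".join(retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical internal documents, for illustration only.
docs = [
    "Loan applications are reviewed within five business days.",
    "The cafeteria opens at nine.",
]
prompt = build_prompt("How long does a loan review take?", docs)
```

The resulting prompt would then be passed to whichever model is in use; that final step is omitted here because it depends on the deployment.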

In addition, its generic task-specific fine-tuning capability enables versatile use across a wide range of business functions. For example, an internal evaluation at NTT that deployed tsuzumi 2 for “financial-system inquiry-handling” showed performance that matches or exceeds that of leading external models.

Also of note here, Tokyo Online University has been operating an on-premise, domestic-origin LLM platform that keeps student and staff data within the campus network. After confirming that tsuzumi 2 delivers stable performance for complex context understanding and long-document processing, and that it has reached a practical level for composite tasks, the university has decided to adopt tsuzumi 2.

With the launch of tsuzumi 2, NTT promises it will “systematically advance solution offerings and service implementations” from across the NTT Group.