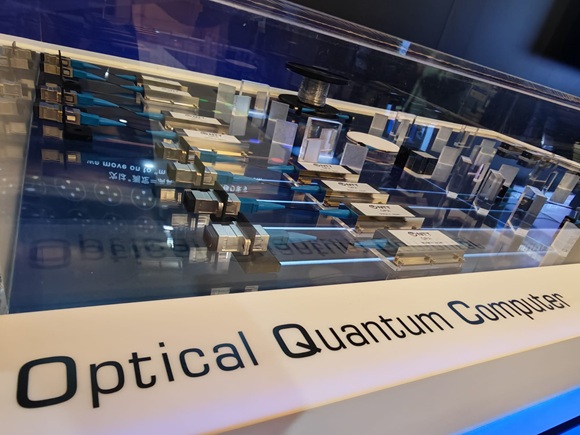

NTT & OptQC to build 1-million qubit optical quantum computer

NTT and OptQC aim to build a 1-million qubit quantum computer based on light-based photonics (rather than electricity) to enhance the reliability, scalability and practicality of the computing systems we will use in the future… and they have a five-year realisation plan to get there.

You know NTT, but do you know OptQC?

Sometimes classed as a Japanese startup, OptQC works with optical photonic technologies to develop a photonic quantum computer that uses light (in the form of photons) as the carrier for quantum information. It makes use of "quantum teleportation and time-domain multiplexing" (yes, that phrase needs an explanation in and of itself; see the end of this story) to achieve high scalability while operating at room temperature.

So then, how expansive is 1-million qubits?

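It's not really possible to say what 1-million qubits is "equivalent" to. Qubits do not map one-to-one onto classical bits: describing the general state of n qubits on a classical computer takes 2^n complex numbers, so there is no base factor in binary digital computing to relate this measure to. And no quantum computer built to date comes anywhere near that many physical qubits.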
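To put a rough number on why qubits don't translate into bits, the short sketch below counts the memory a classical computer would need just to store the full state vector of n qubits, assuming each complex amplitude takes 16 bytes (two 64-bit floats). This is the cost of brute-force simulation, not a measure of what a quantum computer "is", and the function names are hypothetical.

```python
import math

# Assumption: one complex amplitude stored as two 64-bit floats (16 bytes).
BYTES_PER_AMPLITUDE = 16


def amplitude_count(n_qubits: int) -> int:
    """A general n-qubit state needs 2**n complex amplitudes to describe it."""
    return 2 ** n_qubits


def state_vector_bytes(n_qubits: int) -> int:
    """Classical memory needed to hold that full state vector."""
    return amplitude_count(n_qubits) * BYTES_PER_AMPLITUDE


def decimal_digits_in_amplitude_count(n_qubits: int) -> int:
    """Number of decimal digits in 2**n, computed without building the huge integer."""
    return math.floor(n_qubits * math.log10(2)) + 1


for n in (10, 30, 50):
    print(f"{n} qubits: {state_vector_bytes(n):,} bytes")

# For 1 million qubits, even the *count* of amplitudes is a 301,030-digit number.
print(decimal_digits_in_amplitude_count(1_000_000))
```

Each extra qubit doubles the memory: 30 qubits already need about 17 GB, and 50 qubits need about 18 petabytes.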

A machine with 1 million physical qubits could yield around 100 to 1,000 or more logical qubits (error-corrected qubits, each built from many physical qubits), which would put it in the range needed to run complex quantum algorithms, such as using Shor's algorithm to break modern public-key encryption.
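How many logical qubits a physical-qubit budget buys depends on the error-correcting code and on how good the hardware is. Purely as an illustration, the sketch below assumes the rotated surface code, a widely studied code in which one logical qubit of code distance d uses d*d data qubits plus d*d - 1 measurement qubits. NTT and OptQC have not said their optical machine will use this code, and the function names are hypothetical.

```python
# Illustrative assumption: the rotated surface code, where a logical qubit of
# (odd) code distance d uses d*d data qubits plus d*d - 1 measurement qubits.
def physical_per_logical(distance: int) -> int:
    if distance < 3 or distance % 2 == 0:
        raise ValueError("code distance must be an odd integer >= 3")
    return 2 * distance * distance - 1


def max_logical_qubits(physical_budget: int, distance: int) -> int:
    """Upper bound: ignores qubits needed for routing and magic-state production."""
    return physical_budget // physical_per_logical(distance)


# Higher distance suppresses errors more strongly (provided the hardware is
# below the code's error threshold) but leaves fewer logical qubits.
for d in (15, 21, 25, 31):
    print(f"d={d}: {physical_per_logical(d)} physical per logical, "
          f"at most {max_logical_qubits(1_000_000, d)} logical qubits")
```

With distances in this range, a million physical qubits gives roughly 500 to 2,200 logical qubits before any other overheads, which helps explain why estimates land in the hundreds to low thousands.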

Realisation deadline, 2030

NTT, Inc. (NTT) and OptQC Corp. this month signed a collaboration agreement to realise a 1-million qubit optical quantum computer by 2030.

Under the agreement, NTT will contribute the quantum error-tolerance and optical communication technologies it has developed as part of the Innovative Optical and Wireless Network (IOWN) Initiative to OptQC's quantum computing platform, with the aim of commercialising practical, scalable and reliable optical quantum computers.

The companies announced the collaboration during the quantum leap-themed 2025 R&D Forum in Tokyo.

Staged annually, this invite-only exhibition and conference (written that way around deliberately, i.e. there are more live demos of working machines and prototypes than there are glitzy keynotes; this is hard-core tech) is hosted by NTT Group companies in Tokyo and demonstrates the group's technological stance in the fields of optical and quantum computing, artificial intelligence, digital security, mobility, infrastructure and more.

Photons, polarisation & amplitude

As you will know, in conventional classical computers, information is represented by electrical signals and processed by semiconductor processors. As you may not know, in optical systems the information is carried by light, encoded in its various physical quantities, such as the number of photons, polarisation and amplitude.
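To make "information carried by polarisation" concrete, here is a minimal textbook sketch (Jones calculus) of a single polarisation qubit: horizontal polarisation stands for 0, vertical for 1, and a half-wave plate rotated by an angle θ acts as a single-qubit gate. It ignores loss and noise, it is not a description of how NTT or OptQC actually encode their qubits, and the function names are hypothetical.

```python
import math

# Jones vectors in the H/V basis: |H> = (1, 0) encodes 0, |V> = (0, 1) encodes 1.
H = (1 + 0j, 0j)


def half_wave_plate(theta: float):
    """Jones matrix of a half-wave plate with its fast axis at angle theta
    (radians) from horizontal, up to an irrelevant global phase."""
    c, s = math.cos(2 * theta), math.sin(2 * theta)
    return ((c, s), (s, -c))


def apply(matrix, state):
    """Multiply a 2x2 Jones matrix by a 2-component polarisation state."""
    (m00, m01), (m10, m11) = matrix
    x, y = state
    return (m00 * x + m01 * y, m10 * x + m11 * y)


def measure_hv_probabilities(state):
    """Born-rule probabilities of detecting H (outcome 0) or V (outcome 1)."""
    x, y = state
    total = abs(x) ** 2 + abs(y) ** 2
    return (abs(x) ** 2 / total, abs(y) ** 2 / total)


diagonal = apply(half_wave_plate(math.radians(22.5)), H)
print(measure_hv_probabilities(diagonal))   # roughly (0.5, 0.5)

flipped = apply(half_wave_plate(math.radians(45)), H)
print(measure_hv_probabilities(flipped))    # roughly (0.0, 1.0)
```

At 22.5° the plate turns horizontal light into diagonal light, an equal superposition of 0 and 1; at 45° it flips H to V, behaving like a NOT gate.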

According to the NTT R&D Forum team, “Quantum computers are expected to solve complex problems requiring enormous amounts of time using classical computation, with use cases including new drug development, new material design, financial optimisation, climate change prediction and much more. However, quantum computers today are extremely sensitive to their environment, with even a slight noise or fluctuation disrupting their quantum state and producing incorrect results. To realise a practical and commercial quantum computer, it is necessary to realise the stable control of quantum states at a scale of 1-million qubits while correcting errors.”

Today, many quantum computing technologies require special environments such as extremely low temperatures or vacuums, posing significant technical hurdles to practical application.

A new optical approach to quantum

Optical quantum computers, which exploit the properties of light, offer what the companies call "a promising solution": a new approach with low power consumption that can operate at room temperature and pressure.

The optical quantum computer under development by NTT and OptQC will combine NTT’s optical communications and quantum error tolerance technologies with OptQC’s optical quantum computer development technologies.

The companies will begin with four primary areas of joint study:

- The creation of multiplexing and error correction technologies applicable to optical quantum computers.

- The creation of use cases and the development of algorithms and software using optical quantum computers.

- The optical quantum computer supply chain.

- The practical implementation of optical quantum computers and their use cases.

As detailed over the last several years on Computer Weekly, NTT has been conducting research and development in the optical communications field for decades, including work under the IOWN Initiative.

The IOWN Initiative aims to realise an advanced communications infrastructure using optical photonic technology, enabling ultra-high capacity, ultra-low latency and ultra-low power consumption. NTT expects to apply its quantum light source, optical multiplexing (technology for simultaneously transmitting multiple optical signals over a single transmission line) and error correction technology to the development of the optical quantum computer.

Room temperature, comfortable

OptQC says it has developed the world's first optical quantum computer of its new type to operate at room temperature and pressure, and is accelerating efforts toward its practical implementation through projects such as the New Energy and Industrial Technology Development Organization's "Post-5G Information and Communications System Infrastructure Strengthening Research and Development Project."

In November 2024, NTT, the RIKEN Quantum Computing Research Center, the Quantum Control Research Team and Fixstars Amplify announced the world’s first platform for general-purpose optical quantum computing.

Looking ahead, NTT and OptQC will conduct joint research over the next five years. In the first year, the companies will begin technical studies and collaborate with additional partners to create use cases for optical quantum computing; in the second year, they will build a development environment; and in the third year, they will verify use cases.

Quantum teleportation & multiplexing

So to end then… and because we know you really did want to know what quantum teleportation and time-domain multiplexing are, let's explain those terms.

We can say that "quantum teleportation" does involve the "transmission" of a qubit, although no teleport device is required and no particle physically jumps anywhere: what moves is the qubit's "unknown state". The protocol transfers that state between locations using a pair of entangled particles plus ordinary classical communication (two classical bits, in the textbook single-qubit version), and the measurement at the sending end destroys the original state in the process (no, you can't just copy a qubit; the no-cloning theorem forbids it). Further, time-domain multiplexing is designed to reduce hardware complexity by using a single optical channel's "sequential time slots" to carry multiple quantum states.
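For readers who want to see the steps, the sketch below simulates textbook single-qubit teleportation with a plain three-qubit state vector in standard-library Python. Qubit 0 holds the unknown state α|0⟩ + β|1⟩, qubits 1 and 2 share an entangled Bell pair, and qubit 2 belongs to the receiver. After a CNOT and a Hadamard on the sender's side, measuring qubits 0 and 1 yields two classical bits, which tell the receiver whether to apply X and/or Z corrections. Rather than sampling one random outcome, the sketch checks all four. This is the generic discrete-variable protocol, not OptQC's optical implementation, and all names are hypothetical.

```python
import math

SQRT_HALF = 1 / math.sqrt(2)
N_QUBITS = 3  # qubit 0: unknown state; qubits 1 and 2: shared Bell pair


def mask(qubit: int) -> int:
    """Bit mask for a qubit; qubit 0 is the most significant bit of the index."""
    return 1 << (N_QUBITS - 1 - qubit)


def initial_state(alpha: complex, beta: complex) -> list:
    """(alpha|0> + beta|1>) on qubit 0, times (|00> + |11>)/sqrt(2) on qubits 1, 2."""
    state = [0j] * 2 ** N_QUBITS
    for q0, amp in ((0, alpha), (1, beta)):
        base = q0 * mask(0)
        state[base] += amp * SQRT_HALF                      # qubits 1,2 = 00
        state[base | mask(1) | mask(2)] += amp * SQRT_HALF  # qubits 1,2 = 11
    return state


def apply_cnot(state: list, control: int, target: int) -> list:
    new = [0j] * len(state)
    for i, amp in enumerate(state):
        j = i ^ mask(target) if i & mask(control) else i
        new[j] += amp
    return new


def apply_hadamard(state: list, qubit: int) -> list:
    new = [0j] * len(state)
    m = mask(qubit)
    for i, amp in enumerate(state):
        sign = -1 if i & m else 1  # H|0> = (|0>+|1>)/sqrt2, H|1> = (|0>-|1>)/sqrt2
        new[i & ~m] += amp * SQRT_HALF
        new[i | m] += sign * amp * SQRT_HALF
    return new


def receiver_state(state: list, m0: int, m1: int):
    """Probability of measuring (m0, m1) on qubits 0 and 1, and qubit 2's corrected state."""
    base = m0 * mask(0) + m1 * mask(1)
    a, b = state[base], state[base | mask(2)]
    prob = abs(a) ** 2 + abs(b) ** 2
    a, b = a / math.sqrt(prob), b / math.sqrt(prob)
    if m1:  # classical bit m1 says: apply X (swap the amplitudes)
        a, b = b, a
    if m0:  # classical bit m0 says: apply Z (flip the sign of |1>)
        b = -b
    return prob, (a, b)


def teleport_all_outcomes(alpha: complex, beta: complex) -> dict:
    state = initial_state(alpha, beta)
    state = apply_cnot(state, control=0, target=1)  # sender's CNOT
    state = apply_hadamard(state, qubit=0)          # sender's Hadamard
    return {(m0, m1): receiver_state(state, m0, m1)
            for m0 in (0, 1) for m1 in (0, 1)}


alpha, beta = 0.6, 0.8j  # an arbitrary normalised "unknown" state
for bits, (prob, (a, b)) in teleport_all_outcomes(alpha, beta).items():
    print(bits, round(prob, 3), a, b)
```

Every measurement outcome occurs with probability 1/4 whatever α and β are, so the two classical bits reveal nothing about the state; yet after the corrections the receiver holds α|0⟩ + β|1⟩ in every case, while qubit 0 is left in a plain |0⟩ or |1⟩.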

Put in even simpler terms, we need to move qubits around to enable more distributed quantum computation (computers need networking, right?), and quantum teleportation makes that possible. As for time-domain multiplexing, think of it as one single but highly manoeuvrable delivery truck that makes many rapid runs down the same road, carrying a different quantum state on each run, instead of a whole fleet of trucks on a whole network of roads.
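The scheduling idea behind time-domain multiplexing fits in a few lines: items from several "modes" are interleaved round-robin into successive time slots on one channel, and the receiver separates them again by slot position. In optical hardware the slots would be successive light pulses on the same path; here they are just list positions and the quantum states are stand-in string labels, so treat this as a hypothetical illustration of the timing pattern only.

```python
def multiplex(streams: list) -> list:
    """Interleave equal-length streams round-robin: slot t carries item t // n of stream t % n."""
    n = len(streams)
    if n == 0:
        return []
    length = len(streams[0])
    if any(len(s) != length for s in streams):
        raise ValueError("all streams must have the same length")
    return [streams[t % n][t // n] for t in range(n * length)]


def demultiplex(channel: list, n: int) -> list:
    """Recover the n original streams by taking every n-th time slot."""
    return [channel[k::n] for k in range(n)]


# Hypothetical labels standing in for quantum states in three "modes".
modes = [["A0", "A1"], ["B0", "B1"], ["C0", "C1"]]
channel = multiplex(modes)
print(channel)  # ['A0', 'B0', 'C0', 'A1', 'B1', 'C1']
assert demultiplex(channel, len(modes)) == modes
```

One channel (one "truck") does the work of three, at the cost of each mode only getting every third time slot.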

See, simple after all, right?