Pryon: LLMs know how to speak; memory directs what to say

Many of the core constructs of our enterprise computing infrastructure are changing.

Back in the 1990s, we were concerned with CPU ‘clock speed’ and the relative performance of any given member of the Intel Pentium family. The post-millennial years have since seen us adopt cloud computing at scale, which lets us work at a higher level, knowing that the cloud service provider (CSP) hyperscalers are shouldering that headache at the backend with their latest shipments of GPUs and other accelerators.

Hardware has become software in the software-defined post-apocalyptic world of continuous integration and always-on executables.

Memory has also evolved…

Today, we can say that memory has become “part of the logic flow” of modern enterprise systems, but what do we mean by that?

If we accept that organisations everywhere are adopting AI agents, and that agentic services are now dovetailing with generative and predictive AI to form a new holy trinity of functional efficiency, we should also remind ourselves that AI agents are essentially digital representations of human employees.

Human employees need a few key cognitive capabilities to be productive. One of those capabilities is the ability to plan, reason and form language (i.e. the decision-making output that comes from the brain's cortex in humans and from large language models (LLMs) in agents), alongside other essential skills.

The most fundamental skillset to highlight in the context of this discussion, then, is the ability to recall specific, true facts (in context) in order to ground that intelligence in reality. If we are looking for a biological or neurological metaphor to illustrate how technology has shifted, this is how we should define memory today.

A key layer for agentic AI

Execs at Pryon take the subject of memory pretty seriously.

The company is known for its Enterprise Memory Layer, which provides AI agents & apps with the information they need to be trusted & productive. Execs at the organisation say that the way contemporary AI systems use memory at their core is a key part of why so many AI pilots “die on the vine” and never make it past the prototyping stage.

Most post-mortems & think pieces on failed AI projects blame model quality or user ‘learning curves’ but, says Pryon president & CEO Chris Mahl, the real culprit is the messy nature of knowledge work, driven by the key factors detailed below:

- Stale & scattered documentation that users must navigate based on their own intuitive understanding.

- Tribal know-how trapped in Slack threads and AI chat logs

- Best practices that never make it into a searchable system

As detailed just last month on the Computer Weekly Developer Network, context is paramount for AI systems. We know that AI agents, while powerful in many ways, aren’t yet as adept as humans at working around missing information: they require clean and current context, or they simply cannot perform. When context is missing, even the most powerful model feels like a toy… and users abandon it.

LLMs: the wrong unit of intelligence

“Treating the model itself as the ‘product’ is like hiring a PhD rocket scientist and refusing to hand over the blueprints. Intelligence is a systems problem,” explained Chris Mahl, president & CEO of Pryon. “Models supply language and reasoning, but memory supplies the facts, workflow history, user preferences, business rules etc., i.e. the ‘contextual things’ you need your AI to actually know to be productive.”

In other words, he says, raw LLMs know how to speak; memory tells them what to say.

“Ask industry leaders what they want next from GPT-6. The likely answer is better memory. The backlash to GPT-5’s launch that wiped 4o’s memory clearly demonstrated that context & the ability to deliver it is indeed the main driver of user experience for GenAI,” said Mahl.

He reminds us that, as one user said, “[GPT-4o] was like working with a colleague who already knew how I wanted things done. Now it’s been replaced and the new one doesn’t get me.”

The user’s complaint points to a case of workflow interruption, not to a missing therapist.

“For consumers, memory means an assistant that remembers your anniversary gift idea from three months ago or your fitness goals from January. Enterprises, however, need assistants and agents that know what project you’re working on, what the best practices are for your organisation and your role, where the most up-to-date version of that one memo is, and more. AI can then pull select insights and data from different documents, decks and spreadsheets to form a complete understanding of an organisation and deliver precise, trusted answers and actions that actually make money and save time.”

The team at Pryon are adamant that, while the memory modules offered by large language model vendors are “robust and powerful” enough in their own right, they tie a software stack, app instance or other entity to that model, even when the model may no longer be state-of-the-art (or when its price gets hiked after a few years), meaning that inescapable vendor lock-in can occur.

Beware, organisational context hostages

“This drives organisations into a usage model where they effectively become an organisational context hostage. You [the business] – not OpenAI, Anthropic, or Mistral – need to own your intelligence,” insisted Mahl. “At the same time, early dedicated memory vendors have emerged, sewing together a variety of open-source toolkits to let developers build agents & apps that are able to incorporate stateful memory largely via chat history.”

Unfortunately, he says, these systems struggle with accuracy, especially as we introduce the real-world complexities of massive scale, different modalities of data, different source connectors, different file types, different permissions and security requirements, etc.

Mahl points to technical validation of this view and says that these systems are “great tools for hobbyists” building proofs of concept with tightly confined user bases, usage and context complexity… but they are not equipped to handle the needs of serious organisations with serious use cases.

Inside Enterprise Memory Layer

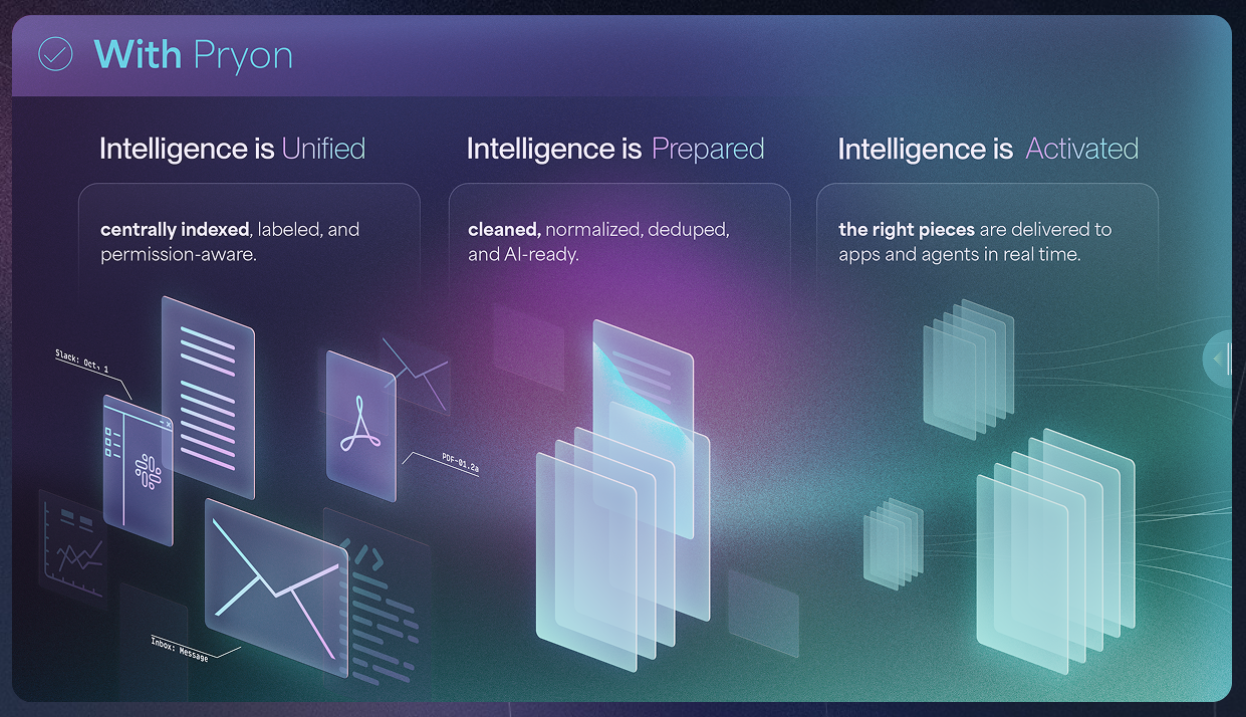

He talks of Pryon’s continuously refreshed enterprise memory layer, which he claims “bridges the context gap” as it ingests and enriches every source of truth: documents, tickets, sheets, emails, chats etc. It also preserves role-based security and compliance requirements, delivers pinpoint answers and actions (not generic text) and allows a business to “own its own intelligence” rather than rent it from a model vendor.

“We have converted stalled pilots into production assistants, applications and agents across Fortune 500 companies and federal agencies where accuracy and security are non-negotiable,” enthused Mahl. “If your team’s AI effort is marooned in proof-of-concept purgatory, the blocker isn’t the model you’re using, it’s the missing institutional memory that should be feeding it. Equip your agents with a purpose-built enterprise memory layer and pilots turn into production workhorses that pay for themselves.”

In search of additional insights, the Computer Weekly Developer Network (CWDN) put a couple of qualifying questions to CEO Chris Mahl to take him to task.

Deeper dive

CWDN: How much architectural inertia is needed to push already-deployed systems that have been engineered without what you see as the requisite level of freedom to tap into enterprise memory layer services?

Mahl: Not much. Think of Pryon’s Memory services more as an add-on than a re-platform. This means it can offer the following functions:

- Connect where data already lives: pre-built connectors for SharePoint, Box, Amazon S3, Confluence, Google Drive, Salesforce Knowledge, ServiceNow and other common repositories let teams ingest content without moving or re-formatting it.

- Perform a fast, governed rollout: we have deployed to complex federal environments in as little as two weeks while still honouring document-level ACLs and IL 2-6 security controls.

- Run where you need it: Pryon can run in all major private and public clouds, on-premises or in air-gapped environments, so no core service has to be torn out or rebuilt.

- Work at the API layer, not a new front-end: existing chatbots, agent frameworks or UIs can call Pryon through REST APIs or embed its UI widgets, so no “big-bang” UI migration is required.
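Mahl does not describe how Pryon honours document-level ACLs internally. A common pattern in retrieval systems generally is to filter candidate documents against the caller's permissions *before* ranking, so restricted content never reaches the model's context. The minimal sketch below illustrates only that general pattern, under stated assumptions: `Document`, `User`, `can_read` and `retrieve` are hypothetical names, group-based ACLs are an assumed permission model, and the word-overlap score is a deliberately naive stand-in for vector search. It is not Pryon's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: frozenset[str]  # hypothetical document-level ACL: groups allowed to read


@dataclass(frozen=True)
class User:
    user_id: str
    groups: frozenset[str]  # groups the caller belongs to


def can_read(user: User, doc: Document) -> bool:
    # Readable if the user shares at least one group with the document's ACL.
    return not user.groups.isdisjoint(doc.allowed_groups)


def retrieve(query: str, corpus: list[Document], user: User, k: int = 3) -> list[Document]:
    """Return up to k readable documents, best match first.

    ACL filtering happens before ranking, so restricted text never
    becomes a candidate for the model's context window.
    """
    terms = set(query.lower().split())
    scored = []
    for doc in corpus:
        if not can_read(user, doc):
            continue  # enforce permissions up front
        # Naive word-overlap score; a stand-in for vector similarity search.
        score = len(terms & set(doc.text.lower().split()))
        if score > 0:
            scored.append((score, doc))
    # Stable sort on score only, so ties keep corpus order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]
```

With this design, a user who belongs only to an `all-staff` group would never see a finance-only document in their results, however closely it matched the query, because it is discarded before scoring.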

In practice, customers start by pointing a single workflow (often a service-desk or knowledge-search use-case) at Pryon, then extend to other teams once they see the uplift. That incremental path keeps architectural inertia (and political friction) low.

CWDN: What is the intersection point between your institutional memory approach and the drive to platform engineering with self-service developer tools (such as those showcased in Internal Developer Platforms) that shoulder a large amount of Ops-level infrastructural provisioning services?

Mahl: Platform Engineering thrives on abstractions: providing developers with easy interfaces to complex infrastructure. Pryon’s platform acts as the highly abstracted, Ops-level managed service for enterprise knowledge.

This offers the following advantages:

- IaaS Abstraction – Developers don’t worry about connector maintenance, ETL pipelines, or vector database management. They consume “knowledge” as a single, performant API.

- Ops-Level Provisioning – Pryon automatically handles the complex Ops tasks of data transformation, vectorization, indexing, and updating (change data capture) across heterogeneous sources.
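Pryon has not published how its change data capture works. True CDC typically reads a source system's change log or event stream; the sketch below swaps in a simpler technique, comparing content hashes between a source snapshot and what has already been indexed, to show the kind of incremental bookkeeping an Ops-level service takes off developers' hands. `fingerprint`, `plan_sync` and `apply_sync` are hypothetical names, and the re-chunking and re-embedding step is left as a comment.

```python
import hashlib


def fingerprint(text: str) -> str:
    # Content hash used to detect whether a document changed since it was indexed.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(source: dict[str, str], index: dict[str, str]) -> dict[str, list[str]]:
    """Diff a source snapshot (doc_id -> text) against the index (doc_id -> fingerprint)."""
    added, updated = [], []
    for doc_id, text in source.items():
        if doc_id not in index:
            added.append(doc_id)
        elif index[doc_id] != fingerprint(text):
            updated.append(doc_id)
    deleted = [doc_id for doc_id in index if doc_id not in source]
    return {"added": sorted(added), "updated": sorted(updated), "deleted": sorted(deleted)}


def apply_sync(source: dict[str, str], index: dict[str, str], plan: dict[str, list[str]]) -> None:
    """Bring the index in line with the source, touching only documents that changed."""
    for doc_id in plan["added"] + plan["updated"]:
        # A real pipeline would re-chunk, re-embed and re-index the document here.
        index[doc_id] = fingerprint(source[doc_id])
    for doc_id in plan["deleted"]:
        del index[doc_id]
```

Only changed documents are reprocessed on each run, which is the point of change data capture; a production pipeline would likely also need to propagate permission changes, not just content changes.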