Inside D-ID’s real-time AI avatar technology

Artificial intelligence has already learned to read, write and reason. In 2026, it’s learning to look, listen and respond in real time. The next frontier for human–computer interaction is visual.

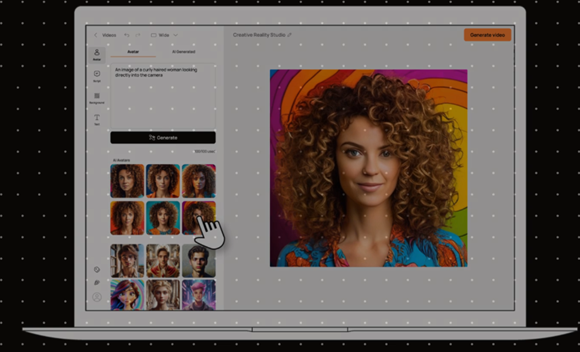

Tel Aviv-based D-ID works in this space.

The company recently expanded its capabilities by acquiring Berlin-based Simple Show and now offers what are being billed as “photorealistic digital avatars” that can listen, think and respond to users in real time.

Working at sub-200 millisecond latency and generating video at 100 frames per second (FPS), the system enables a form of interaction that is said to feel less like talking to a machine and more like conversing with a person through glass.

Frame-rate barrier

At the heart of this technology is a new “diffusion-animation inference pipeline” optimised for GPU batching and parallelism.

“Where traditional pipelines generate frames sequentially, D-ID’s approach runs multiple stages at once, producing video at 100 FPS, roughly four times faster than playback speed,” said Eliran Kuta, CTO and co-founder of D-ID. “That overhead provides a vital performance buffer. By generating frames ahead of time, the system smooths motion, reduces jitter and allows dynamic adjustment of stream quality. The entire process, from audio input to rendered avatar response, completes in under 200 milliseconds, thanks to the team’s decision to parallelise each part of the workflow rather than chain them serially.”
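To put rough numbers on that buffer, here is a minimal arithmetic sketch. It assumes a 25 FPS playback rate, which is only implied by the “four times faster” figure and is not stated by D-ID; the function names are illustrative.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps


def lead_frames(gen_fps, playback_fps, seconds):
    """Frames generated beyond what playback consumed over `seconds`."""
    return (gen_fps - playback_fps) * seconds


def lead_seconds(gen_fps, playback_fps, seconds):
    """The same lead expressed as seconds of playable video."""
    return lead_frames(gen_fps, playback_fps, seconds) / playback_fps


# Assumed rates: 100 FPS generation (from the article), 25 FPS playback (assumption).
print(frame_budget_ms(100))         # per-frame generation budget in ms
print(lead_seconds(100, 25, 1.0))   # seconds of video banked per second of generation
```

This is a steady-state throughput figure. In a live conversation the generator can only run ahead over audio that has already been synthesised, so the practical lead would be smaller.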

Speech recognition (ASR), language generation (LLM), text-to-speech (TTS), facial animation and video encoding all run concurrently, each on its own GPU thread. The result is continuous, conversational responsiveness that approaches real-world dialogue speed.
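The idea of overlapping stages rather than chaining them can be sketched with queues between workers. The following is an illustrative model only, using Python’s `asyncio` with sleeps standing in for real work; the stage names come from the article, but the structure is an assumption and not D-ID’s implementation.

```python
import asyncio

STAGES = ["asr", "llm", "tts", "animation", "encode"]
_DONE = object()  # sentinel that tells each stage to shut down


async def stage(name, inbox, outbox, delay=0.01):
    """Pull a chunk, 'process' it, pass it on. Runs concurrently with other stages."""
    while True:
        item = await inbox.get()
        if item is _DONE:
            await outbox.put(_DONE)
            return
        await asyncio.sleep(delay)       # stand-in for real model work
        await outbox.put(item + [name])  # record which stages touched the chunk


async def run_pipeline(chunks):
    """Feed chunks through all stages; chunk N+1 enters ASR while chunk N is in the LLM."""
    queues = [asyncio.Queue() for _ in range(len(STAGES) + 1)]
    tasks = [
        asyncio.create_task(stage(name, queues[i], queues[i + 1]))
        for i, name in enumerate(STAGES)
    ]
    for chunk in chunks:
        await queues[0].put([chunk])
    await queues[0].put(_DONE)

    results = []
    while True:
        item = await queues[-1].get()
        if item is _DONE:
            break
        results.append(item)
    await asyncio.gather(*tasks)
    return results


if __name__ == "__main__":
    print(asyncio.run(run_pipeline(["chunk-0", "chunk-1", "chunk-2"])))
```

Because each stage is a single FIFO consumer, chunk order is preserved end to end while different chunks occupy different stages at the same time.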

From diffusion to motion fields

Kuta explains that instead of reconstructing full 3D facial meshes, D-ID’s neural rendering stack combines viseme-to-frame transformers with motion-field diffusion models. A viseme-to-frame transformer is an AI model that uses a vision transformer architecture to generate realistic video frames (such as mouth movements) from speech-related viseme data (visual mouth shapes for sounds like ‘ooh’, ‘ah’, ‘eek’, ‘er’ and so on). This hybrid allows for micro-expressions, the quick eye blinks and subtle muscle shifts that make faces believable, without the computational load of 3D geometry.

NOTE: A viseme is any of several speech sounds that look the same, for example when lip-reading.

“Lip-sync precision is maintained through CTC-based audio encoding that aligns phonemes to timecodes and feeds them directly into motion-vector generation. The result is lip movement within 30 milliseconds of speech output, tighter than broadcast-grade sync requirements,” explained Kuta. “This design choice shifts the emphasis from graphical modelling to temporal coherence, not just how good an avatar looks, but how smoothly it reacts in time with the voice driving it.”
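As an illustration of how CTC output can be turned into timecodes, the sketch below applies the standard CTC collapse rule (merge repeated labels, drop blanks) to a per-frame label sequence. The 20 ms frame duration, the `_` blank symbol and the function name are assumptions made for the example; the article does not describe D-ID’s encoder at this level.

```python
BLANK = "_"  # assumed blank symbol for this example


def ctc_align(frame_labels, frame_ms=20.0):
    """Collapse per-frame CTC labels into (phoneme, start_ms, end_ms) segments.

    Consecutive identical labels are merged into one segment; blanks are
    dropped, and a blank between two identical labels keeps them separate.
    """
    segments = []
    prev = BLANK
    for i, label in enumerate(frame_labels):
        if label != BLANK:
            if label == prev:
                # Same phoneme continues: extend the current segment's end time.
                phoneme, start, _ = segments[-1]
                segments[-1] = (phoneme, start, (i + 1) * frame_ms)
            else:
                segments.append((label, i * frame_ms, (i + 1) * frame_ms))
        prev = label
    return segments


if __name__ == "__main__":
    labels = ["_", "h", "h", "_", "e", "e", "e", "l", "_", "l", "o"]
    for seg in ctc_align(labels):
        print(seg)
```

Each resulting segment carries a start and end time that a downstream animation stage could, in principle, map to a mouth shape.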

But generating high-fidelity, low-latency video is only half the battle. Running it for thousands of users simultaneously demands a carefully orchestrated infrastructure stack.

Engineering for real-time scale

To achieve the performance needed, D-ID’s own streaming API employs HTTP/2 and WebRTC to manage bi-directional video and audio flow. Underneath, Kubernetes and gRPC microservices coordinate GPU workloads across Azure cloud clusters, using autoscaling policies driven by latency telemetry.
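A latency-driven scaling decision can be sketched with the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × observed metric / target metric). The 200 ms target, the use of a 95th-percentile latency and the replica bounds are assumptions for illustration, not D-ID’s actual policy.

```python
import math


def p95(samples):
    """95th-percentile value of a non-empty list (nearest-rank method)."""
    ordered = sorted(samples)
    rank = (95 * len(ordered) + 99) // 100  # integer ceil(0.95 * n)
    return ordered[rank - 1]


def desired_replicas(current, observed_ms, target_ms, min_replicas=1, max_replicas=64):
    """Scale replica count in proportion to observed vs. target latency."""
    want = math.ceil(current * observed_ms / target_ms)
    return max(min_replicas, min(max_replicas, want))


if __name__ == "__main__":
    latencies_ms = [150, 180, 210, 190, 320, 170, 160, 200, 240, 300]
    observed = p95(latencies_ms)
    print(observed, desired_replicas(current=4, observed_ms=observed, target_ms=200.0))
```

In a real cluster the metric would come from the telemetry pipeline and the decision would be applied by the autoscaler itself; the function above only shows the arithmetic.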

Kuta says that each GPU node reuses tensors and applies activation checkpointing to manage memory efficiently, a crucial detail at 100 FPS, where even brief overflows can interrupt a live stream. Meanwhile, chunked inference ensures that if a single frame fails to render, the system continues seamlessly without full regeneration.
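One way to read “continues seamlessly without full regeneration” is that a failure is contained within its chunk. The sketch below assumes a hypothetical `render_chunk` callable and a simple hold-last-frame fallback; both are illustrative choices, since the article does not say how D-ID fills the gap.

```python
def stream_chunks(frame_ids, render_chunk, chunk_size=4):
    """Yield rendered frames chunk by chunk, isolating failures to one chunk.

    If a chunk fails, the last good frame is repeated for that chunk's
    duration. Chunks that were already emitted are never recomputed.
    """
    last_good = None
    for start in range(0, len(frame_ids), chunk_size):
        chunk = frame_ids[start:start + chunk_size]
        try:
            frames = render_chunk(chunk)
        except RuntimeError:
            if last_good is None:
                continue  # nothing rendered yet, so there is no frame to hold
            frames = [last_good] * len(chunk)
        last_good = frames[-1]
        yield from frames


if __name__ == "__main__":
    def flaky_render(chunk):
        # Hypothetical renderer that fails on any chunk containing frame 4.
        if 4 in chunk:
            raise RuntimeError("render failed")
        return [f"frame-{i}" for i in chunk]

    print(list(stream_chunks(list(range(8)), flaky_render, chunk_size=2)))
```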

The result is a cloud-native architecture capable of sustaining continuous generative video sessions at scale, something that has typically required bespoke on-prem setups.

Developer-first design

Despite the complexity under the hood, D-ID’s platform is built to be developer-friendly. The API exposes both REST and WebSocket endpoints, while lightweight SDKs in Python and Node.js handle token authentication, session setup and WebRTC negotiation.

“Developers can stream an audio buffer and receive real-time video chunks or route video directly via RTMP for broadcast, with minimal integration overhead. Observability comes baked in: Prometheus metrics expose latency profiles, dropped-frame rates, GPU utilisation and stream uptime for precise debugging,” said Kuta.

The intent here is clear: to abstract away the rendering pipeline so developers can focus on user experience rather than graphics engineering.

Accelerated diffusion & temporal stability

Behind the scenes, the company has fine-tuned denoising diffusion implicit models (DDIMs) for facial motion prediction, reducing inference steps from 50-plus down to eight to ten per frame sequence.
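DDIM sampling permits this kind of reduction because it can run over a sparse subsequence of the diffusion timesteps used in training. The sketch below picks an evenly spaced subsequence and compares step counts; the 1,000 training timesteps are an assumption (a common default), and D-ID’s actual schedule is not described in the article.

```python
def ddim_timesteps(train_steps, infer_steps):
    """Evenly spaced timesteps, ordered from most noisy to least noisy."""
    stride = train_steps // infer_steps
    return list(range(0, train_steps, stride))[:infer_steps][::-1]


def step_reduction(baseline_steps, reduced_steps):
    """How many times fewer denoising passes the reduced schedule needs."""
    return baseline_steps / reduced_steps


if __name__ == "__main__":
    print(ddim_timesteps(1000, 10))
    print(step_reduction(50, 10), step_reduction(50, 8))
```

Since each step is one pass through the denoising network, going from 50 steps to ten cuts that part of the compute by about a factor of five, and going to eight by a little over six.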

To prevent jitter between frames, Kuta explains that D-ID uses cross-frame attention and motion-latent smoothing, techniques that maintain expression continuity across time. Developers can even modulate emotion intensity through latent-space interpolation, giving avatars adjustable personality and tone, expressive without drifting into caricature.
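Both ideas can be illustrated on plain vectors. The sketch below uses linear interpolation between a neutral and an expressive latent for emotion intensity, and an exponential moving average as a simple stand-in for motion-latent smoothing. The latent values, function names and the choice of an exponential moving average are assumptions; the article does not specify D-ID’s smoothing method, and cross-frame attention is not modelled here.

```python
def blend_emotion(neutral, expressive, intensity):
    """Linear interpolation in latent space; intensity 0 is neutral, 1 is fully expressive."""
    if not 0.0 <= intensity <= 1.0:
        raise ValueError("intensity must be between 0 and 1")
    return [n + intensity * (e - n) for n, e in zip(neutral, expressive)]


def ema_smooth(latents, alpha=0.5):
    """Exponential moving average over per-frame latents to damp frame-to-frame jitter.

    Lower alpha means heavier smoothing (and more lag behind the raw signal).
    """
    smoothed = []
    prev = None
    for z in latents:
        if prev is None:
            prev = list(z)
        else:
            prev = [alpha * x + (1.0 - alpha) * p for x, p in zip(z, prev)]
        smoothed.append(prev)
    return smoothed


if __name__ == "__main__":
    print(blend_emotion([0.0, 0.0], [1.0, 2.0], 0.5))
    print(ema_smooth([[0.0], [1.0], [1.0]], alpha=0.5))
```

Clamping intensity to the range between the two reference latents is one simple way to keep expressions from overshooting into caricature.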

Emotionally intelligent digital humans

This kind of controllability promises to move the technology beyond fixed characters and toward dynamic, emotionally intelligent digital humans, claims the D-ID team.

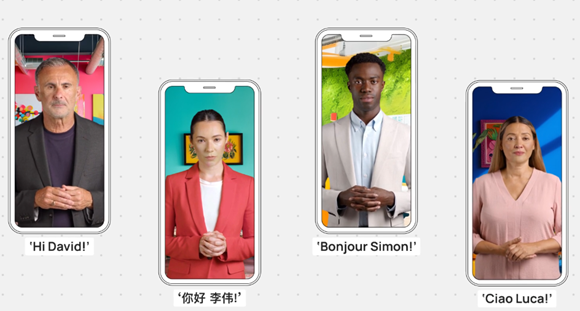

“The significance of this achievement lies as much in architecture as in aesthetics. D-ID treats avatar rendering as a modular visual layer that can integrate atop any conversational AI stack like OpenAI, Anthropic, ElevenLabs, or otherwise,” said Kuta. “By separating the ‘face’ of AI from the underlying logic, we effectively introduce a new interface paradigm: the Visual Natural User Interface (VNUI). Where previous generations of AI focused on text and speech, this one adds facial nuance and emotional presence, arguably the most human form of communication.”

Until recently, photorealistic generative video belonged to research papers and demos. D-ID’s work can be said to be more “operationally complete”, in that it shows the technology can run as a live cloud service. The implications span customer experience, healthcare, training and possibly broadcast media.

A new feel for AI

“For enterprises, it means avatars can now act as front-line digital representatives capable of explaining complex topics, onboarding new users, or providing multilingual support, all with traceable logs and compliance-ready data. For developers, it means access to infrastructure that once required deep expertise in computer vision and GPU tuning,” said Kuta. “This represents not just a performance milestone but a new model for how AI can feel.”

The lesson here is less about any one company and more about a shift in computing design. Generative video has become a systems problem, one that blends model efficiency, GPU memory management and cloud-scale orchestration into a single, responsive pipeline.

If the next generation of AI is judged by how naturally it can look, listen and respond, D-ID’s work may stand as one of the first proofs that photorealism and real-time performance can finally coexist.