Amazon Bedrock AgentCore tools-up for agentic AI software development

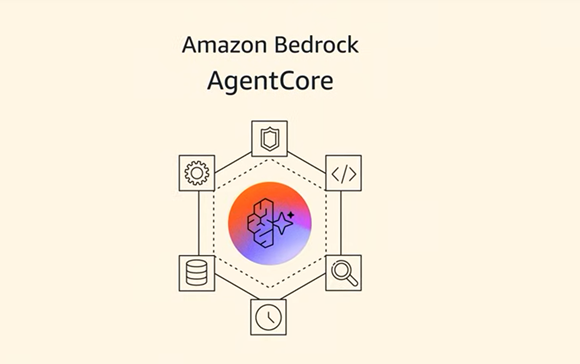

AWS used its re:Invent 2025 conference to announce new developments in Amazon Bedrock AgentCore, its platform for building and deploying agents.

In a world where we all want to know where AI guardrails are being laid down, new and updated features such as ‘Policy in AgentCore’ allow software application development teams to set boundaries on what agents can do with tools.

AgentCore Evaluations enables software engineering teams to understand how their agents will perform in real-world, live deployment scenarios.

There was also news of an enhanced memory capability that enables agents to “learn from experience” and improve over time.

AWS reminds us that while the ability for agents to reason and act autonomously makes them powerful and potentially very useful, businesses must establish tough controls and guidelines to create secure, functional systems that prevent unauthorised data access, inappropriate interactions and system-level mistakes.

As we know, even with prudent prompting, agents make real-world mistakes that can have serious consequences.

“We are launching Policy in Amazon Bedrock AgentCore, which helps organisations set clear boundaries for agent actions. Using natural language, teams can now give agents boundaries by defining which tools and data they can access, what actions they can perform and under what conditions. These tools could be APIs, Lambda functions, MCP servers, or popular third-party services like Salesforce and Slack. To ensure agents stay fast and responsive, Policy is integrated into AgentCore Gateway to instantly check agent actions against policies in milliseconds,” noted AWS in a product statement.

The company says that its work is designed to ensure agents stay within defined boundaries while operating autonomously.

Natural language-based policy authoring gives users a more accessible way to create fine-grained policies: they describe rules in plain language instead of writing formal policy code.

For example, a simple policy like “Block all refunds from customers when the reimbursement amount is greater than $1,000” can be implemented and enforced consistently, following Amazon’s “trust, but verify” principle. This will allow agents to operate autonomously while maintaining appropriate oversight.
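To make the refund rule concrete, here is a minimal sketch of what such a check could look like once the plain-language rule has been turned into code. It is purely illustrative and is not the AgentCore API: the `ToolCall` type, the `issue_refund` tool name and the `is_allowed` function are all hypothetical names chosen for this example. Only the $1,000 threshold comes from the rule quoted above.

```python
from dataclasses import dataclass


# Hypothetical type for illustration only; this is not the AgentCore API.
@dataclass(frozen=True)
class ToolCall:
    tool: str          # name of the tool the agent wants to invoke
    amount_usd: float  # reimbursement amount carried by the call


REFUND_LIMIT_USD = 1_000  # threshold taken from the example rule in the article


def is_allowed(call: ToolCall) -> bool:
    """Return False for refund calls above the limit, True for everything else."""
    if call.tool == "issue_refund" and call.amount_usd > REFUND_LIMIT_USD:
        return False
    return True


if __name__ == "__main__":
    print(is_allowed(ToolCall("issue_refund", 250.0)))    # small refund
    print(is_allowed(ToolCall("issue_refund", 1_500.0)))  # refund above the limit
```

The point of the sketch is the placement of the check: it sits between the agent and the tool, so the agent can reason freely while every action is still verified before it runs.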

AI agent behavioural analysis

Working on software metrics with AI agents differs from the practices normally used with traditional software: it requires complex data science pipelines, subjective assessments and continuous real-time monitoring, a challenge that compounds with each agent update or model change.

“AgentCore Evaluations simplifies complicated processes and eliminates complex infrastructure management with 13 pre-built evaluators for common quality dimensions such as correctness, helpfulness, tool selection accuracy, safety, goal success rate and context relevance. Additionally, developers have the flexibility to write their own custom evaluators using their preferred LLMs and prompts. Previously, this required months of data science work to build just the evaluation systems. The new service continuously samples live agent interactions to analyse agent behaviour for pre-identified criteria like correctness, helpfulness and safety,” detailed AWS, at this year’s event.

Software application development teams can set up alerts for proactive quality monitoring, using evaluations both during testing and in production.
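As a rough illustration of the sample-score-alert loop described here, the sketch below samples a set of interactions, scores each one and raises an alert when the average drops below a threshold. Every name in it (`sample_interactions`, `should_alert`, `toy_correctness`) is an assumption made for this example, and the toy evaluator is a stand-in for a real one, which would typically call an LLM or a scoring rubric. None of it reflects how AgentCore Evaluations is actually implemented.

```python
import random
from statistics import mean
from typing import Sequence


def sample_interactions(interactions: Sequence[str], rate: float, seed: int = 0) -> list:
    """Keep each interaction with probability `rate` (seeded for repeatability)."""
    rng = random.Random(seed)
    return [item for item in interactions if rng.random() < rate]


def toy_correctness(transcript: str) -> float:
    """Stand-in evaluator: scores 1.0 unless the transcript is marked as an error."""
    return 0.0 if "ERROR" in transcript else 1.0


def should_alert(scores: Sequence[float], threshold: float) -> bool:
    """Alert when the mean score of the sampled interactions falls below the threshold."""
    return bool(scores) and mean(scores) < threshold


if __name__ == "__main__":
    # Made-up transcripts, for illustration only.
    live = ["ok: booked flight", "ERROR: wrong tool", "ok: refund issued", "ERROR: timeout"]
    sampled = sample_interactions(live, rate=1.0)
    scores = [toy_correctness(t) for t in sampled]
    print(should_alert(scores, threshold=0.8))
```

The same functions would serve in testing and in production; only the source of the interactions and the sampling rate would change.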

Episodic functionality, agentic learning

AWS also says that AgentCore Memory provides a critical feature, allowing an agent to build a coherent understanding of users over time. AgentCore Memory is now making a new “episodic functionality” generally available that allows agents to learn from past experiences and apply those insights to future interactions.

Through structured episodes that capture context, reasoning, actions and outcomes, another agent automatically analyses patterns to improve decision-making. When agents encounter similar tasks, they can quickly access relevant historical data, reducing processing time and eliminating the need for extensive custom instructions.

AWS provided an arguably slightly screwball example of an agent that books airport transportation 45 minutes before the flight when a passenger is travelling alone, but remembers, three months later when the same passenger is travelling with kids, to allow more time.
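For readers who want a feel for what a "structured episode" might look like, here is a minimal sketch built around the airport example. The `Episode` fields mirror the four elements named above (context, reasoning, actions, outcomes), but the class, the tag-overlap lookup and the sample data are all assumptions for illustration, not the AgentCore Memory schema or its retrieval method.

```python
from dataclasses import dataclass
from typing import List, Optional, Set


# Hypothetical structure; not the AgentCore Memory schema.
@dataclass
class Episode:
    context: Set[str]  # tags describing the situation
    reasoning: str     # why the agent acted as it did
    action: str        # what the agent did
    outcome: str       # what happened as a result


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Overlap between two tag sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def most_similar(episodes: List[Episode], context: Set[str]) -> Optional[Episode]:
    """Return the stored episode whose tags overlap most with `context`, if any overlap."""
    best = max(episodes, key=lambda e: jaccard(e.context, context), default=None)
    if best is None or jaccard(best.context, context) == 0.0:
        return None
    return best


if __name__ == "__main__":
    # Made-up episodes, loosely based on the airport example.
    history = [
        Episode({"airport_transfer", "solo"}, "solo traveller, carry-on only",
                "booked car 45 minutes before flight", "arrived on time"),
        Episode({"airport_transfer", "with_kids"}, "family needs longer at check-in",
                "booked car with extra time", "arrived on time"),
    ]
    match = most_similar(history, {"airport_transfer", "with_kids"})
    print(match.action if match else "no relevant episode")
```

A production system would presumably use richer similarity than tag overlap, but the shape is the same: record what happened, then look up the closest past situation before acting.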

While most of us would remain aware of core travel details before a flight and override any agentic mistakes, perhaps we will become more reliant on these services in the future and actually appreciate this kind of functionality… it’s early days all round, so let’s keep working with the agents to see what happens.