AI workflows - Snyk: Embedding developer-centric security into the AI pipeline

This is a guest post for the Computer Weekly Developer Network written by Randall Degges, head of developer relations & community, Snyk.

Degges writes in full as follows…

AI-powered workflows play an increasingly important role in the software development lifecycle. We’re starting to see orchestrated pipelines, complete with agentic models, vector databases and intelligent automation platforms handling more than just code generation.

Yet as AI models and services become integral to modern dev workflows, an entirely new threat is emerging. It’s dynamic, often opaque and dangerously under-guarded.

The security paradox

AI development offers speed and scale – two tempting benefits that come with serious trade-offs. The same tools that auto-suggest fixes or compose new app logic can also introduce security vulnerabilities or amplify bias and misconfiguration. Ironically, many organisations are embracing AI to boost productivity without fully understanding the security implications.

The paradox is stark: developer trust in AI is high, while scrutiny is low.

Snyk’s State of Open Source Security report revealed that nearly 80% of developers believe AI-generated code is more secure than code written by humans. Even more concerning, 84% apply the same level of scrutiny to both human and AI-suggested open-source packages. This represents a security blind spot that’s growing as AI becomes embedded into core dev workflows.

AI workflows are not secure by default

AI tooling is becoming increasingly intuitive and accessible. Tools like GitHub Copilot, LangChain and various orchestration platforms now seamlessly slot into the IDE or CI/CD pipeline. Under the hood, these workflows can touch everything from infrastructure-as-code (IaC) to runtime environments and sensitive training data.

Yet many of these AI-infused pipelines lack essential guardrails, whether the gap is a pre-commit hook that skips vulnerability scans, a misconfigured LLM plugin that exposes secrets, or poor access control across model orchestration layers.

AI workflows can also create widespread risks. For instance, an LLM that autocompletes insecure dependency declarations or misconfigures cloud infrastructure can trigger vulnerabilities at scale. And because these models are trained on vast, unvetted datasets, they may hallucinate fixes or include flawed patterns, potentially leaving developers with a false sense of assurance.

Securing the AI workflow

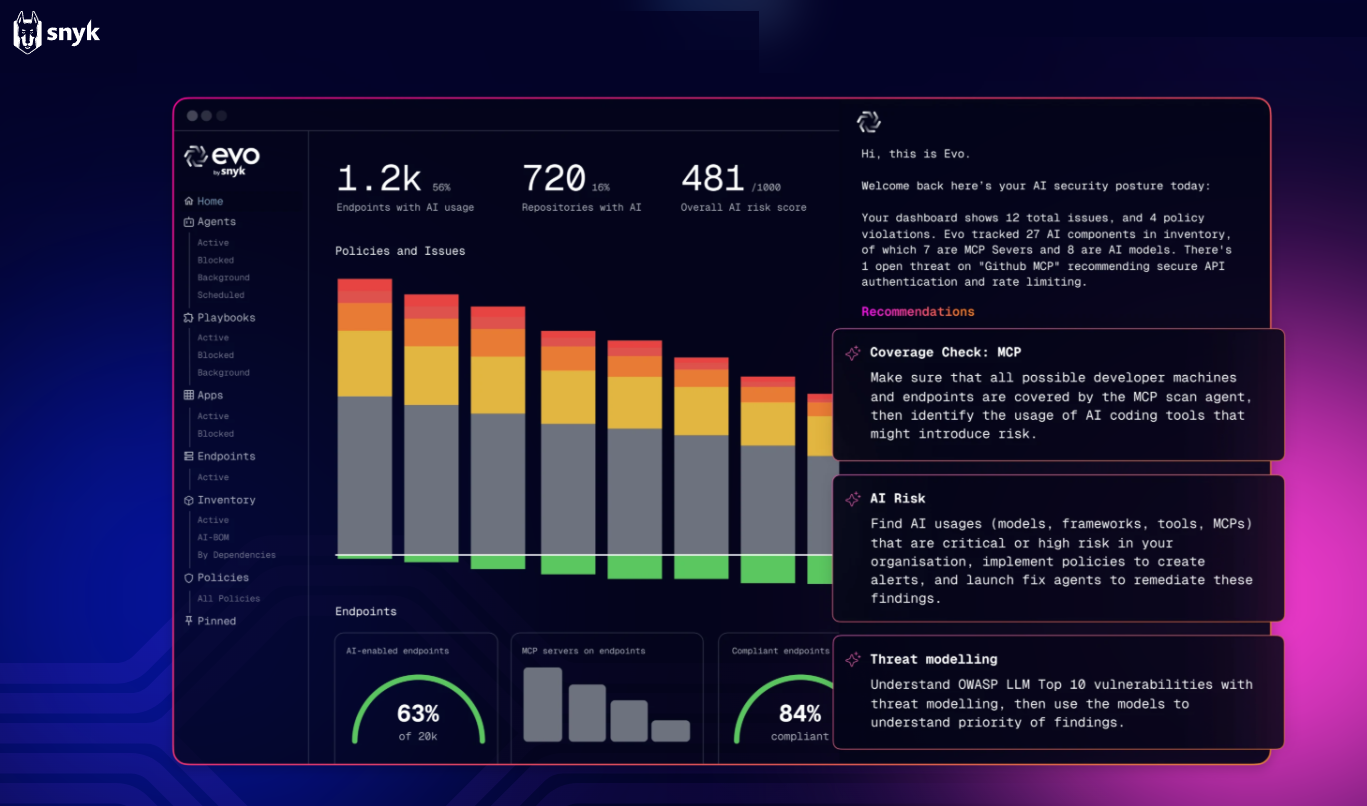

The good news is that there’s no need to reinvent the wheel to secure AI workflows. We just need to extend well-established DevSecOps principles to new AI-driven contexts. At Snyk, for example, we advocate embedding developer-first security checks directly into every phase of the AI pipeline.

This should include pre-commit scanning, so that LLM-suggested code undergoes the same vulnerability, licence and misconfiguration checks as human-authored code. It should also include policy enforcement: defining and enforcing policies that govern the use of third-party models, packages and API integrations.
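As a rough illustration of what such a policy gate could look like, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `Change` record, the `policy_violations` function and the allowlist contents are hypothetical and do not represent Snyk's product or any real tool's API. The point it tries to make is that the check never looks at who, or what, wrote the change.

```python
from dataclasses import dataclass

# Hypothetical allowlists; a real organisation would load these from its own policy store.
APPROVED_PACKAGES = {"requests", "numpy"}
APPROVED_MODELS = {"internal-llm-v2"}


@dataclass(frozen=True)
class Change:
    """A proposed change, described only by what it pulls in (illustrative type)."""
    author_kind: str                  # "human" or "ai"; recorded, but never used by the check
    packages: tuple[str, ...] = ()
    models: tuple[str, ...] = ()


def policy_violations(change: Change) -> list[str]:
    """Return policy violations for a change, applying identical rules to any author."""
    violations = []
    for package in change.packages:
        if package not in APPROVED_PACKAGES:
            violations.append(f"package not approved: {package}")
    for model in change.models:
        if model not in APPROVED_MODELS:
            violations.append(f"model not approved: {model}")
    return violations


if __name__ == "__main__":
    ai_change = Change("ai", packages=("requests", "leftpad"), models=("public-llm",))
    for violation in policy_violations(ai_change):
        print(violation)
```

A check like this could run from a pre-commit hook or a CI step and fail the build whenever the returned list is non-empty. Because `author_kind` is deliberately ignored, AI-suggested and human-authored changes are held to the same standard.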

Organisations need to take model governance seriously – tracking versions, curating training datasets and setting clear decision boundaries with discipline. It’s equally important to build in monitoring and alerting, so unusual model behaviour is caught quickly, particularly when systems are running autonomously.
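To make the monitoring point concrete, the sketch below flags an observation that drifts far from a recorded baseline. It is a deliberately simple assumption-laden example: the metric (say, the number of tool calls an agent makes per run), the `is_unusual` function and the threshold of three standard deviations are all hypothetical choices, and real systems would likely track several signals with more robust statistics.

```python
from statistics import mean, stdev


def is_unusual(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Return True if `observed` is more than `threshold` standard deviations
    away from the mean of `baseline` (a simple z-score rule)."""
    if len(baseline) < 2:
        raise ValueError("need at least two baseline observations")
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all counts as unusual.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical metric: tool calls made by an agent in each of its recent runs.
    recent_runs = [10, 12, 11, 9, 10, 11]
    for calls in (11, 40):
        status = "ALERT" if is_unusual(recent_runs, calls) else "ok"
        print(f"{calls} tool calls: {status}")
```

In practice the alert would feed whatever paging or ticketing system the team already uses; the important part is that an autonomous system's behaviour is compared against an explicit baseline rather than assumed to be normal.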

These safeguards work best when baked in from day one, with development and security teams working closely together.

The hybrid AI security approach

A hybrid model combines the strengths of symbolic AI with today’s large language models. Generative tools are powerful for spotting risky patterns or suggesting fixes, but they’re most effective when paired with rule-based engines and vetted security databases.

Tooling can flag when AI-generated code introduces outdated or vulnerable dependencies, for example, even if the code looks syntactically correct. Security platforms also help by keeping context across languages such as Python, JavaScript and Go, spotting risky logic or insecure patterns that might otherwise sneak through.
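The rule-based half of that pairing can be sketched in a few lines of Python. The example below checks pinned dependencies against a vetted advisory list, regardless of whether a human or an LLM wrote the pin. The advisory data, package name and identifier are invented for illustration, the function names are hypothetical, and the version parsing assumes plain dotted numeric versions pinned with `==`; it is not how any particular security platform works.

```python
# Hypothetical advisory data; a real tool would query a curated vulnerability database.
ADVISORIES = {
    "examplelib": {"id": "DEMO-0001", "fixed_in": (2, 4, 0)},
}


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a plain dotted numeric version such as '2.3.1' (assumed format)."""
    return tuple(int(part) for part in text.split("."))


def flag_vulnerable(requirement_lines: list[str]) -> list[str]:
    """Return a finding for each 'name==version' pin older than the advisory's fixed version."""
    findings = []
    for raw_line in requirement_lines:
        line = raw_line.strip()
        # Skip blanks, comments and anything that is not an exact pin.
        if not line or line.startswith("#") or "==" not in line:
            continue
        name, _, version_text = line.partition("==")
        name = name.strip().lower()
        advisory = ADVISORIES.get(name)
        if advisory and parse_version(version_text.strip()) < advisory["fixed_in"]:
            fixed = ".".join(str(part) for part in advisory["fixed_in"])
            findings.append(f"{name} {version_text.strip()}: {advisory['id']} (fixed in {fixed})")
    return findings


if __name__ == "__main__":
    # Syntactically valid pins, as an assistant might suggest them.
    suggested = ["examplelib==2.3.1", "otherlib==1.0.0"]
    for finding in flag_vulnerable(suggested):
        print(finding)
```

The suggested pins here are perfectly well-formed, which is the point: only a lookup against vetted data reveals that one of them is out of date.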

Security must become invisible

The goal is not to bog developers down with yet another tool. It’s about bringing AI-aware security into every decision point in the developer journey. By integrating security guardrails into IDEs, CLIs and pipelines that developers already use, we can reduce friction and boost adoption.

This is particularly important in an age of vibe coding and agentic development, where tools proactively write, test and deploy code. Security in that setting has to be frictionless and adapt to how developers actually work.

Overcoming misplaced trust

Perhaps the greatest risk in today’s AI workflows isn’t technological – it’s psychological. Developers are increasingly outsourcing decisions to tools that they trust without validation.

When an LLM suggests a snippet that “just works”, it’s easy to move fast and trust the output. But research has shown that AI-generated code often contains subtle flaws – flaws that might be missed unless rigorously tested and reviewed. Even so, our industry has not updated its peer review, testing or QA standards to accommodate this shift.

Organisations need to build awareness and process discipline around AI tooling, much like they did for open-source software adoption a decade ago. That means training teams to understand AI risks, putting checks in place for AI-generated content and making sure there’s clear accountability for where code comes from and how it’s reviewed.

The importance of security

AI workflow platforms offer immense promise, but without integrated security, they become risks wrapped in convenience. From supply chain vulnerabilities to hallucinated logic and model misuse, the threat is only growing.

To truly unlock AI’s potential in the workplace, it’s vital that we secure the entire pipeline. This means looking beyond code and also securing the models, the data and the orchestration layers in between. After all, AI can never be truly transformative if we can’t trust it.