Modern development - Cohesity: Defining a new rhythm for the paroxysms of data schism

This Computer Weekly Developer Network series is devoted to examining the leading trends that go towards defining the shape of modern software application development.

As we have initially discussed here, with so many new platform-level changes now playing out across the technology landscape, how should we think about the cloud-native, open-compliant, mobile-first, Agile-enriched, AI-fuelled, bot-filled world of coding and how do these forces now come together to create the new world of modern programming?

This contribution comes from Ezat Dayeh in his role as SE Manager UK&I at Cohesity. The company is known for tackling mass data fragmentation through data management, beginning with backup.

Dayeh writes as follows…

What 2020 has shown (given the Covid-19 pandemic, political upheavals and everything else) is that business processes need to be adaptable and flexible if they are going to survive new ways of working, respond to changing consumer habits and weather difficult economic challenges.

Software development cycles have always been under pressure to deliver right-first-time production software fast, so dev teams scrum and sprint. What often holds them back, however, is access to the data they need, because of infrastructure issues. Data is too often fragmented across a host of silos that cannot match the speed required (and also cost too much) to give developers the tools to make sure their software is fit for purpose.

Testing times

Cohesity’s Dayeh: Say goodbye to monophasic development, say hello to the new mix.

What modern software development needs is an infrastructure that provides an agile, simple and risk-free development environment.

Test data, for example, is a critical part of the software development lifecycle. Development and test teams need enough quality test data to build and test applications in scenarios that reflect business reality. Because test data is foundational, changes to it ripple through larger processes. Faster access to test data can directly shorten time to release. Higher-quality, more realistic test data can reduce the number of defects customers encounter in production software.

There’s always a but (so here it is): traditional test data management infrastructure is misaligned with modern software development.

Monophasic development and monolithic applications have given way to more iterative development predicated on microservices. DevOps methodology and Agile development practices have evolved to support this trend. However, the underlying problem of data availability has largely remained unresolved.

The traditional approach to test data management delays development and testing, given that provisioning the relevant data can itself take days or even weeks. And it is a legacy issue: provisioning data to multiple teams, across multiple geographies, at an ever-growing pace is a hard technical problem.

Cloud to the rescue? Ah, no.

But doesn’t the public cloud overcome legacy issues?

To an extent the public cloud has accelerated the pace of innovation by bringing elasticity and economics while reducing time to market for new applications. However, it is not a panacea.

Public cloud environments carry over operational inefficiencies from legacy on-premises environments and introduce several new challenges. For most organisations, the data footprint straddles multiple public clouds and on-premises environments, so test/dev requires data mobility between them. Running dev/test in the cloud adds further roadblocks, including mismatched formats between on-premises and public cloud VMs. This schism strains manageability, impedes application mobility and is often accompanied by dramatic cost challenges.

A way of speeding up test/dev is to repurpose backup data as test data.

This means you can back up a server or database, instantly make a clone of it without consuming extra storage and use that clone to help develop applications and software. It does not require additional infrastructure, and the management overhead is part and parcel of the backup.
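Cloning "without consuming extra storage" is generally achieved with copy-on-write: each clone shares the backup's data blocks and stores only the blocks it changes. The Python sketch below illustrates that generic technique under simplifying assumptions (data modelled as a fixed list of in-memory blocks; the class names `Snapshot` and `Clone` are hypothetical). It is not Cohesity's implementation or API.

```python
from typing import Dict, List


class Snapshot:
    """An immutable, point-in-time set of data blocks (e.g. taken from a backup)."""

    def __init__(self, blocks: List[bytes]) -> None:
        self._blocks = tuple(blocks)  # frozen, so no clone can alter the backup

    def read(self, index: int) -> bytes:
        return self._blocks[index]

    def __len__(self) -> int:
        return len(self._blocks)


class Clone:
    """A writable view over a snapshot that stores only the blocks it changes."""

    def __init__(self, base: Snapshot) -> None:
        self._base = base
        self._overlay: Dict[int, bytes] = {}  # block index -> this clone's private copy

    def read(self, index: int) -> bytes:
        # Changed blocks come from the overlay; all other blocks are shared with the base.
        if index in self._overlay:
            return self._overlay[index]
        return self._base.read(index)

    def write(self, index: int, data: bytes) -> None:
        if not 0 <= index < len(self._base):
            raise IndexError(index)
        # Copy-on-write: the write lands only in this clone's overlay.
        self._overlay[index] = data

    def extra_blocks(self) -> int:
        # Storage consumed beyond the shared snapshot.
        return len(self._overlay)


# Two teams clone the same (hypothetical) backup independently.
backup = Snapshot([b"customers", b"orders", b"invoices"])
dev = Clone(backup)
test = Clone(backup)
print(dev.extra_blocks())   # 0: a fresh clone costs no extra blocks
dev.write(1, b"orders-with-test-rows")
print(dev.read(1))          # b'orders-with-test-rows'
print(test.read(1))         # b'orders': other clones still see the backup
print(dev.extra_blocks())   # 1: only the changed block is stored
```

A clone is therefore "zero-cost" only at creation; storage grows with the blocks each team modifies, which for short-lived test copies is typically a small fraction of the original dataset.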

This gives teams near-instant and self-service access to up-to-date and zero-cost clones, increasing the speed, quality and cost-effectiveness of development. Or to put it more succinctly: for faster software development, IT teams should look for software solutions that provide instant zero-cost clones.

Data management has a special role to play in terms of modern software application development and it can help define a new rhythm for the paroxysms of data schism.

Can you get with the beat?

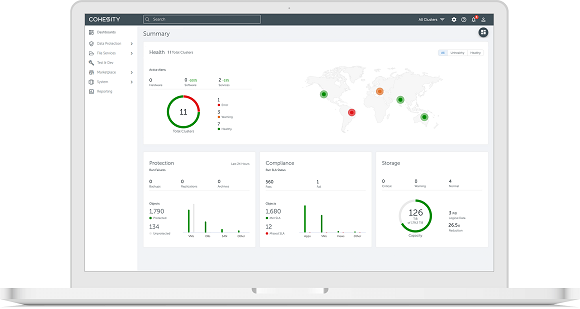

Cohesity promises to consolidate data management silos with a single, web-scale solution. (Approved Image Source: Cohesity).