Dynatrace Perform 2024: Cloud-native tool consolidation via unified observability

Dynatrace Perform has grown.

When a company has to move conference locations in order to accommodate a larger number of attendees, it’s generally a sign of positive growth and expansion.

Now very much a platform company (yes, developers working in cloud engineering and data science can build applications on top of Dynatrace, so that they run in and on the platform for observability management control), the company staged Dynatrace Perform 2024 and the Computer Weekly Developer Network (CWDN) team tuned in to download the uploads, so to speak.

In its current form, Dynatrace refers to itself as a unified observability and security platform company with AI-powered capabilities for data analytics and automation.

These are terms that (arguably) need defining, clarifying and validating. The way Dynatrace talks about unified observability means that we’re looking at cloud-native observability across data, software code, connection points such as APIs, fragmented-yet-connected disparate computing elements and volumes such as containers… and of course the network connections that interweave between all of the above and more.

Concurrent AISecOps

As a secondary point of unification, we also have unified security via the platform (of course, security doesn’t come second; it comes concurrently and works symbiotically with unified observability, with one discipline feeding the other).

Why does unified security matter?

As explained by Bernd Greifeneder, CTO and founder of Dynatrace, in pre-show meetings with the CWDN team, the reason is that so many enterprise firms have upward of 100 security tools in their IT department and that’s simply not effective, efficient, functional or indeed secure.

Dynatrace Perform 2024

Onward to the conference itself.

After the customary super-ebullient welcome from British emcee/host Mark Jeffries, Dynatrace CEO Rick McConnell and Dynatrace chief transformation officer Colleen Kozak presented a plenary session entitled ‘a vision for cloud done right’ on the event main stage.

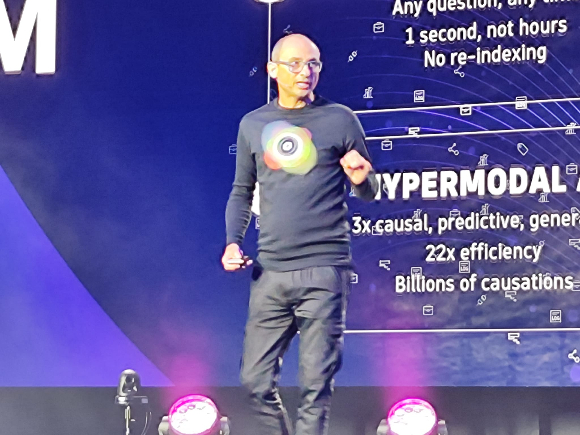

Just to cross off how these sessions were labelled, McConnell then followed with Greifeneder and Dynatrace SVP of product management Steve Tack to present ‘the Dynatrace difference’ before leading into sessions dedicated to ‘overcoming cloud challenges’ with Dynatrace chief customer officer Matthias Dollentz-Scharer and Dynatrace’s chief technical strategist Alois Reitbauer.

Tectonic shifts & megatrends

Talking about the tectonic shifts that he sees happening in business, CEO McConnell pointed to some IT megatrends starting with what he calls out as cloud modernisation.

“The opportunity in the cloud now is enormous (although there is massive complexity and a huge increase in data with an explosion of tools to also manage), we have the opportunity to reduce costs and increase application performance to such a degree – if you look at the major three hyperscalers and what they are bringing to market, they have experienced huge growth. Match this with the ubiquitous proliferation of generative Artificial Intelligence (AI), we can see how immense gains in productivity are now possible. AI is now becoming essential – mandatory even – to business success. We must also evolve threat protection as we now manage businesses in the modern cloud age,” said McConnell.

Amidst all the transformation happening right now, McConnell openly apologised for being somewhat self-serving in saying that, from his team’s perspective and for many others… he sees observability as being key to making things happen effectively in modern cloud-native deployments.

Contextual analytics, hypermodal AI, automation

The core approach from Dynatrace now breaks down into three major cornerstones.

- Contextual analytics based on the Dynatrace data lakehouse Grail – this is not about correlation, it is about causation.

- Hypermodal AI is also key. Generative AI is important but is (of course) only as good as the underlying dataset that it leverages, and Dynatrace says that with Grail, the company is able to deliver data that is deterministic and aligned for defined mission-critical use.

- Automation – being able to trust the answers coming from predictive analytics and self-remediate to a point where enterprise software works perfectly.

In terms of the total Dynatrace platform offering (and customer subscription), Tack repeated that word the company loves to use – unified… and said that through Dynatrace, organisations can bring their security teams into their DevOps practices.

“Thinking about how you make cloud modernisation happen and how companies can embrace data and operationalise these services, we all want to innovate faster and that can’t just be done by people… it’s a question of people, processes and technology. This means it’s all about looking at how the business works and making sure that nobody works in a silo and all teams start to be able to collaborate in a cross-functional way so that firms can maximise the way they use technologies and automate business processes for success,” said Colleen Kozak, with her transformation job title clearly making her well-suited to this kind of commentary.

A brief customer session followed, presented with Chris Conklin from US-based TD Bank, in which the spokesperson explained how observability has helped the firm reduce ‘customer irritants’ in its operations.

Conklin said that his IT team typically had to jump from tool to tool to tool in order to try and resolve customer issues. This of course also involved keeping up with a lot of different maintenance tasks associated with each tool, losing time moving between tools and users needing to work out which tool to look into in the first place at any one time. The central message (no prizes for guessing here) is that observability has helped the bank move towards its ‘dream’ of a single pane of glass for all these functions.

Green coding for Earth

Matthias Dollentz-Scharer dove into cloud cost optimisation functions in the closing keynote session, explaining how his team would look for under-utilised machines and use the Dynatrace platform to bring forward a more efficient use of cloud computing. This of course helps organisations reduce their carbon footprint.
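To make the idea of hunting for under-utilised machines a little more concrete, here is a minimal Python sketch. It is purely illustrative and is not how the Dynatrace platform works internally: the function name `find_underutilised`, the 20% CPU threshold and the sample host data are all assumptions invented for this example.

```python
from statistics import mean


def find_underutilised(cpu_samples, threshold=20.0):
    """Return the sorted names of hosts whose mean CPU utilisation
    (in percent) falls below the threshold.

    cpu_samples is a hypothetical mapping of host name to a list of
    CPU utilisation readings; hosts with no readings are skipped.
    """
    return sorted(
        host
        for host, readings in cpu_samples.items()
        if readings and mean(readings) < threshold
    )


# Made-up readings for three imaginary hosts (percent CPU).
samples = {
    "host-a": [5.0, 7.5, 6.0],
    "host-b": [65.0, 80.0, 72.5],
    "host-c": [15.0, 22.0, 18.0],
}

print(find_underutilised(samples))  # ['host-a', 'host-c']
```

In practice a team would presumably weigh memory, network and time-of-day patterns as well as CPU before consolidating or switching off a machine, so a single-metric threshold like this is only a starting point.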

Showcasing work carried out with customer Lloyds Banking Group, the speakers detailed efforts at the datacentre level, the host and container level… and also at the ‘green coding’ level i.e. work to optimise the source code of applications to reduce the workload placed upon CPUs by making sure it is designed and written in the most efficient software programming language possible.

This is clearly a long-term initiative (as no enterprise will want to rip and replace), but organisations can start with green coding efforts targeted at simply refactoring and optimising one single API, and the aggregate effects of this type of work should spell good news for the planet.

Previewing the afternoon sessions, the Dynatrace team thanked the morning keynote attendees and wrapped up day one. When it comes to creating better cloud services… as they say in observability circles ‘you can’t measure what you can’t see’ so the need to look inside clouds clearly remains fairly critical.

Dynatrace’s Greifeneder: Truly ‘pumped’ about the new platform updates & enhancements.

Tack (right) and Greifeneder (left).