AI Workflows - AODocs: Fix permissions now, before the bots find them

This is a guest post for the Computer Weekly Developer Network written by Stéphane Donzé, CEO of AODocs.

AODocs provides cloud-native document management and governance solutions that help enterprises control, secure and govern their content across Google Workspace, Microsoft 365 and other cloud platforms.

Donzé writes in full as follows…

Companies are accelerating AI workflow deployments across their enterprises.

But as businesses rush to implement copilots, AI search and agentic systems, they are running into a big problem. These systems make data far easier to find and search, and they can now instantly surface years of overshared, stale and improperly governed data that was previously hidden.

As a result, organisations must inject governance into their company documents and data permissions before AI workflows reveal the full extent of security vulnerabilities.

Agents access the inaccessible

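Copilots and similar AI tools respect existing permissions, which means they can retrieve anything a user is technically allowed to open, whether or not that access was ever intended.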

This often results in what security teams are calling the “holy s***” moment – when AI suddenly surfaces files that were technically “open” for years but practically “hidden” by obscurity.

In fact, research suggests that 99% of organisations have sensitive data exposure that AI can easily surface. Also, one in ten sensitive files is exposed org-wide in typical SaaS environments. As GenAI usage explodes, the probability of accidental exposure multiplies exponentially.

The fact is that traditional information protection relied on users not knowing where to look or lacking search sophistication to find sensitive content. AI assistants obliterate this protection overnight.

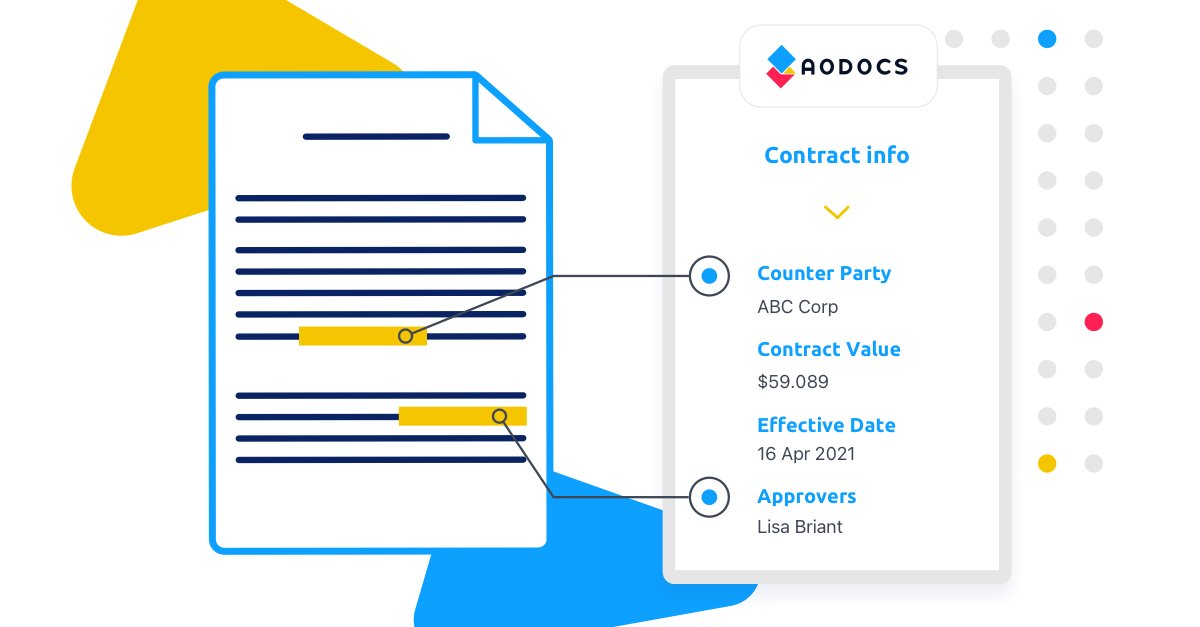

Semantic search combined with intelligent agents transforms “I can’t find the Johnson acquisition documents” into “show me everything about the Johnson acquisition” – instantly retrieving confidential files that were always accessible but never discoverable.

A governance debt problem

Every organisation carries governance debt – years of accumulated “everyone” links, org-wide groups, stale HR and finance shares, ghost user accounts and orphaned collaborative spaces.

These permission patterns create perfect conditions for AI-driven data exposure:

- Financial documents shared to “anyone with the link” during board preparations

- HR folders accessible to company-wide groups from legacy organisational structures

- Orphaned project sites containing competitive intelligence

- Former employees’ accounts with lingering access to confidential repositories

Independent research confirms the blast radius. While most organisations believe their sensitive data is well-protected, AI-powered discovery reveals a different reality – one where confidential information is routinely accessible to far more people than intended.

Building guardrails before go-live

Before deploying any AI agents, enterprises need focused remediation. Industry leaders are adopting a standardised protocol.

- Run comprehensive data access governance reports that map all “everyone” access patterns across SharePoint, OneDrive, Teams, Box and Salesforce. The goal is visibility into the current exposure footprint; most organisations are shocked by what they find.

- Apply sensitivity labels and encryption systematically. HR, legal and finance content gets locked down by default since these departments generate the highest-risk content for inadvertent AI disclosure.

- Convert legacy “anyone with the link” shares to specific permissions and remove org-wide groups that made sense in 2018 but create massive exposure today. Close ghost accounts and revoke dormant access grants that accumulate over years of employee turnover.

- Enforce retention policies for must-keep documents while defensibly deleting “keep forever” content sprawl to reduce ongoing liability.

- Start AI deployments with least-privilege user cohorts using ring-based rollouts. Test prompts that commonly surface sensitive content to validate that controls actually work before broader deployment.
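
To make the reporting and link-conversion steps above more concrete, here is a minimal sketch of a pass over a permissions export. It is an illustration under stated assumptions, not any vendor's API: the `Grant` record, its field names, the `ORG_WIDE_GROUPS` set and the `"anyone-with-link"` marker are all hypothetical, and a real report would be built from each platform's own admin export.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical names of org-wide groups; real tenants will differ.
ORG_WIDE_GROUPS = {"everyone", "all-staff"}


@dataclass(frozen=True)
class Grant:
    """One row of a hypothetical permissions export."""
    path: str                    # document or folder path
    principal: str               # user, group, or "anyone-with-link"
    sensitive: bool              # True if the item is classed as sensitive
    account_active: bool = True  # False for leavers ("ghost" accounts)


def risk_reasons(grant: Grant) -> list[str]:
    """Return the reasons a grant needs remediation (empty if none)."""
    reasons = []
    if grant.principal == "anyone-with-link":
        reasons.append("public link")
    if grant.principal.lower() in ORG_WIDE_GROUPS:
        reasons.append("org-wide group")
    if not grant.account_active:
        reasons.append("ghost account")
    return reasons


def remediation_queue(grants: list[Grant]) -> list[tuple[Grant, list[str]]]:
    """Flag risky grants, listing sensitive items first."""
    flagged = [(g, risk_reasons(g)) for g in grants]
    flagged = [(g, r) for g, r in flagged if r]
    # sorted() is stable, so export order is kept within each group.
    return sorted(flagged, key=lambda item: not item[0].sensitive)


if __name__ == "__main__":
    # Made-up sample rows, for illustration only.
    sample = [
        Grant("/finance/board.xlsx", "anyone-with-link", sensitive=True),
        Grant("/wiki/lunch.md", "Everyone", sensitive=False),
        Grant("/hr/pay.csv", "j.smith", sensitive=True, account_active=False),
        Grant("/eng/design.md", "eng-team", sensitive=False),
    ]
    for grant, reasons in remediation_queue(sample):
        print(grant.path, grant.principal, ", ".join(reasons))
```

Sorting sensitive items to the top reflects the priority the steps give to HR, legal and finance content; the queue only identifies what to fix, and the actual revocation would go through each platform's own tooling.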

Governance as a living system

One-time cleanup isn’t sufficient.

Organisations must embed governance directly into their AI workflow platforms through continuous data security posture management signals, DLP policies for GenAI traffic, automated joiner-mover-leaver processes and site lifecycle management rules. If not, they risk exponentially higher rates of policy violations and data exposure incidents as GenAI adoption accelerates.

Progress should be measured against concrete targets. Sensitive files with org-wide “everyone” access should drop below 1%. Unlabelled sensitive repositories in HR, legal and finance should hit zero. Ghost accounts with access to confidential content must be eliminated entirely. Track the mean time to revoke risky shares and monitor the reduction in GenAI DLP violations as proof that governance workflows are working.
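
As a rough illustration of how some of these measures could be tracked, the sketch below computes the org-wide exposure rate, the count of unlabelled sensitive items and the mean time to revoke. The `SensitiveFile` and `RiskyShare` records and their fields are assumptions made for this example; in practice the inputs would come from whatever governance reporting the organisation already runs.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class SensitiveFile:
    """Hypothetical inventory row for a file classed as sensitive."""
    path: str
    org_wide: bool   # shared with an "everyone"-style group or link
    labelled: bool   # carries a sensitivity label


@dataclass(frozen=True)
class RiskyShare:
    """Hypothetical record of a risky share and when it was closed."""
    detected: datetime
    revoked: Optional[datetime]  # None while the share is still open


def org_wide_rate(files: list[SensitiveFile]) -> float:
    """Fraction of sensitive files exposed org-wide (0.0 if no files)."""
    if not files:
        return 0.0
    return sum(f.org_wide for f in files) / len(files)


def unlabelled_count(files: list[SensitiveFile]) -> int:
    """Number of sensitive files that still lack a label."""
    return sum(not f.labelled for f in files)


def mean_time_to_revoke(shares: list[RiskyShare]) -> Optional[timedelta]:
    """Average detection-to-revocation time over closed shares only."""
    closed = [s.revoked - s.detected for s in shares if s.revoked is not None]
    if not closed:
        return None
    return sum(closed, timedelta()) / len(closed)


def meets_exposure_target(files: list[SensitiveFile]) -> bool:
    """Check the article's target: org-wide exposure below 1%."""
    return org_wide_rate(files) < 0.01
```

Open shares are excluded from the mean here, which flatters the figure if many shares are never revoked; reporting the count of still-open shares alongside it is one way to keep the number honest.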

The answer is not to slow AI adoption but to build governance into the fabric of these systems. As organisations bet heavily on AI workflows to drive efficiency, the stakes for getting permissions right have never been higher.