Why water usage is the datacentre industry’s dirty little secret

In this guest post to mark Sustainable Development Week, Arnaud de Bermingham, president and founder of cloud provider Scaleway, sets out why the datacentre industry must come clean about its water usage habits.

Global warming is forcing us to question the techniques ordinarily used to cool datacentres, as they are responsible for a significant part of the digital economy’s energy consumption. Reducing power consumption is our industry’s number one priority, followed closely by water conservation and waste management, both of which remain taboo.

To put this into perspective, a single datacentre can use power equivalent to a small city and requires a significant amount of water for cooling. As an extreme example, a 15 megawatt datacentre in the USA can use up to 360,000 gallons of water a day and, more often than not, the water is stored in cooling towers, a practice that carries considerable environmental and health risks.
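To make that figure easier to compare, the sketch below converts it into litres of water per kilowatt-hour. It assumes the site draws its full 15 megawatts around the clock and that the gallons are US gallons; neither assumption is stated in the figure itself, and the function name is purely illustrative.

```python
# Rough water intensity implied by the 15 MW / 360,000 gallons-a-day example.
# Assumptions (not from the article): constant full load, US liquid gallons.
US_GALLON_IN_LITRES = 3.785411784  # definition of the US liquid gallon


def water_intensity_l_per_kwh(power_mw: float, gallons_per_day: float) -> float:
    """Litres of water used per kWh of electricity over one day."""
    energy_kwh_per_day = power_mw * 1000 * 24  # MW -> kW, then 24 hours
    litres_per_day = gallons_per_day * US_GALLON_IN_LITRES
    return litres_per_day / energy_kwh_per_day


intensity = water_intensity_l_per_kwh(15, 360_000)
print(f"{intensity:.2f} litres per kWh")
```

Under those assumptions the example works out to one gallon, or just under four litres, for every kilowatt-hour consumed.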

And that is why – today – I’m calling for a ban on water cooling towers by datacentre operators and more transparency from industry players.

Cooling towers: Why they need to disappear

Cooling towers are inefficient, unsafe, and unnecessary in modern hyperscale datacentres. “Dry” technologies, like the dry coolers widely used in European datacentres, have been around for decades.

With the industry facing scrutiny over carbon emissions, water is poised to be the next major resource to come under public examination. It is therefore imperative that our customers have a better understanding of the pressing issue the datacentre industry is facing with regard to water consumption.

How did we get to a point where it is acceptable for a datacentre to consume what is often millions of gallons of water, to the detriment of society and agricultural crops? It’s a major blot on the industry’s copybook, and it is no surprise that datacentre operators don’t like to talk about it.

Demand for data is on an upward trajectory like never before, with projections showing overall data usage will rise by 60% by 2040, producing 24 million tonnes of greenhouse gases every year.

But whilst we focus on energy consumption, water usage slips under the radar.

What are cooling towers?

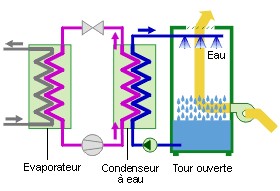

Liquid can be cooled in two different ways.

The first way is to use a closed circuit with an exchanger through which air passes. This is what is known as dry cooling. It’s the same principle as your car’s radiator: there are two separate circuits – one for the air, and one for the water which needs cooling.

The two circuits are never in direct contact, and they both pass through the same exchanger. Because they are never in direct contact, no water is consumed by the system. This is the process that Scaleway has been using for around 15 years in most of our datacentres.

The second technique involves spraying water from the top combined with an air flow going in the opposite direction (a cooling tower). The water partially evaporates, cools down and exchanges its heat with the air. This type of system is less expensive to purchase but uses large quantities of water. Also, as the hot water and the air are in direct contact, it’s the ideal breeding ground for bacteria – thus requiring the use of disinfectants which end up evaporating into the air.

Many datacentres use cooling towers because they are less expensive to purchase than closed circuit alternatives, and they also take up far less space.

Cooling towers and the risk to public health

The Institut Pasteur estimates that between 8,000 and 18,000 people are infected by Legionella every year. In the USA, the Centers for Disease Control and Prevention (CDC) identifies cooling towers as one of the main sources of legionnaires’ disease. In France, 18 people died and 86 were infected in 2003 in an outbreak caused by a faulty cooling tower in the Nord-Pas-de-Calais area.

The public rarely hears about these types of cases, and they are seldom publicised even when lawsuits are involved because they are generally settled out of the public eye and involve confidentiality clauses.

Legionella has been recognised as one of the top three or four microbial causes of community-acquired pneumonia, and cooling towers remain the main cause of this disease.

To avoid the risk of a Legionella infection, most datacentres use chlorine and bromine-based chemicals and disinfectants, causing pollution and acid rain. Bromine is a nasty chemical that is highly toxic for organic systems and impacts the neuronal membrane. It has a toxic effect on our brains, and exposure to the chemical can result in drowsiness and psychosis amongst other neurological disorders.

All of this goes to show that datacentre operators have a responsibility to not only look after our planet by implementing truly energy efficient technologies, but to also protect the health of those living close to our sites by making conscious choices to use technologies that do not require cooling towers.

The ongoing Covid-19 coronavirus crisis has shown us how dangerous respiratory illnesses can be, and how we should take steps to prevent them where possible.

France has banned cooling towers but their usage persists elsewhere. The rest of the world needs to ban cooling towers too. We simply don’t need them.

Cooling without the towers

Scaleway’s latest datacentre has been built with an environmentally conscious approach – even when we are hit by the biggest of heatwaves, we never turn on the air conditioning.

However, when it comes to the rest of the industry’s stakeholders, datacentre cooling techniques seem to be stuck in the last century. The industry has adopted a ‘copy paste’ approach using tried-and-tested structures that meet the unjustified specifications of major customers. And, despite our willingness to often invest sums as big as €75m and €100m for improvements, as a sector we invest precious little of that money in energy-saving research.

We need to promote a far greater awareness of the challenges facing our industry and, in many ways, the change needs to come from customers. Thanks to technological developments, new equipment classes can withstand higher temperatures than previously possible, meaning that temperature requirements from customers need to be adapted to match and allow for more energy-efficient solutions.

Not only do customers need to turn toward more efficient practices in line with what their equipment can withstand, but investors need reassurance, and those writing specifications need to be better informed.

Sweating your datacentre assets

Our DC5 centre is different to most other datacentres. From the ground up, it is exceptionally energy and water-efficient. It also provides a significant financial edge for our clients, leaving outdated, environmentally heavy technology out in the cold.

We use an adiabatic cooling system – mimicking how the human body sweats to cool down. By evaporating a few grams of water into the air, a few hours per year, the air coming from the outside can be cooled by nearly 10°C.
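A quick energy balance shows why "a few grams" is enough. The sketch below estimates how much water must evaporate to cool one kilogram of air by a given amount, using textbook approximations for the latent heat of vaporisation of water and the specific heat of air. These constants and the function name are assumptions introduced for illustration, not figures from DC5.

```python
# Energy balance for evaporative (adiabatic) cooling of air.
# Heat given up by the air = heat absorbed by the evaporating water.
# Textbook approximations near room temperature (assumed, not DC5 data):
LATENT_HEAT_KJ_PER_KG = 2450.0  # latent heat of vaporisation of water
CP_AIR_KJ_PER_KG_K = 1.005      # specific heat of dry air


def water_needed_g_per_kg_air(delta_t_c: float) -> float:
    """Grams of water to evaporate to cool 1 kg of air by delta_t_c degrees."""
    heat_removed_kj = CP_AIR_KJ_PER_KG_K * delta_t_c
    return heat_removed_kj / LATENT_HEAT_KJ_PER_KG * 1000  # kg -> g


grams = water_needed_g_per_kg_air(10.0)
print(f"{grams:.1f} g of water per kg of air for a 10 degree drop")
```

With these values, a 10°C drop takes roughly four grams of water per kilogram of air. In practice the achievable drop also depends on how humid the incoming air already is, since evaporation cannot cool air below its wet-bulb temperature.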

We’ve known about this process since ancient times, and it allows us to maintain stable, optimal conditions for our precious servers – both those that belong to our customers and also to Scaleway’s public cloud.

Our process is incredibly straightforward. It works with standard computing equipment without the need for proprietary modifications. We have even built a climatic chamber to study the impact of all weather and humidity conditions on virtually all IT equipment available on the market today.

How does it work?

Ours is a unique design. With 2,200 sensors and measurement points, an algorithm can analyse conditions across the site in real time. This means that the datacentre adapts, self-regulates, and optimises its processes every 17 milliseconds so that every sensor gets exactly the right energy and cooling needed to function at maximum efficiency.
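To give a flavour of what a self-regulating loop of this kind involves, here is a deliberately simplified sketch. It is not Scaleway's algorithm: the function name, the setpoint, the gain, and the idea of steering a single fan speed from the hottest reading are all hypothetical choices made for illustration.

```python
# Hypothetical feedback step: NOT the real control system, just the general idea.
def next_fan_speed(readings_c, setpoint_c, current_speed, gain=0.05):
    """Nudge fan speed (0.0 to 1.0) up or down according to the temperature error."""
    error = max(readings_c) - setpoint_c       # positive when the hottest point is too warm
    proposed = current_speed + gain * error    # incremental correction
    return min(1.0, max(0.0, proposed))        # clamp to the physical range


speed = 0.5
# Each inner list stands in for one round of sensor readings (made-up values).
for readings in ([27.0, 29.0, 31.0], [26.5, 28.0, 29.5], [25.0, 26.0, 27.0]):
    speed = next_fan_speed(readings, setpoint_c=28.0, current_speed=speed)
    print(f"hottest reading {max(readings):.1f} C -> fan speed {speed:.2f}")
```

A real installation would repeat a step like this on a fixed cycle, with far more inputs and outputs than one temperature list and one fan.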

The water and energy savings of our system are immense. The system needs virtually no maintenance and uses no greenhouse gas refrigerants. In other words, it’s zero carbon.

By contrast, traditional cooling units require huge quantities of energy. They are also subject to technical limitations including unplanned downtime, spikes in energy consumption, and lower efficiency during heatwaves. It is mind-boggling that we have reached a point where it is deemed acceptable for a datacentre to be using 30-40% of its total energy consumption for air conditioning in winter.

Out of the estimated 650 billion kWh of electricity consumed in 2020 by datacentres, at least 240 billion kWh is wasted on air conditioning.
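As a quick sanity check, the two estimates above can be turned into a share. The snippet uses only the figures quoted in this article; the variable names are illustrative.

```python
# Share of datacentre electricity going to air conditioning,
# using the 2020 estimates quoted above (billion kWh).
total_billion_kwh = 650
air_conditioning_billion_kwh = 240

share = air_conditioning_billion_kwh / total_billion_kwh
print(f"Air conditioning share: {share:.1%}")
```

That comes to a little under 37%, which sits inside the 30-40% range mentioned earlier.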

It’s time to end this wasteful behaviour and rethink the way we build, design, and operate our datacentres. It can be done. We are the proof. It’s now time for the rest of the industry to get on board with the latest designs for operating clean and efficient datacentres.