Qualys expands TotalAI to shore up AI & LLM model risk

Qualys has announced updates to its TotalAI service to secure organisations’ MLOps pipelines from development to deployment.

Users will now be able to test their large language models (LLMs), even during their development testing cycles, with stronger protection against more attacks and on-premises scanning powered by an internal LLM scanner.

A recent study suggested 72% of CISOs are concerned that generative AI solutions could result in security breaches for their organisations.

“As AI becomes a core component of business innovation, security can no longer be an afterthought,” said Tyler Shields, principal analyst at Enterprise Strategy Group. “Qualys TotalAI ensures that only trusted, vetted models are deployed into production, enabling both agility and assurance across organisations’ AI usage. This security helps organisations achieve their innovation goals while managing their risk.”

Qualys TotalAI is purpose-built for the unique realities of AI risk, going beyond basic infrastructure assessments to directly test models for jailbreak vulnerabilities, bias, sensitive information exposure and critical risks mapped to the OWASP Top 10 for LLMs.

Taking a risk-led approach, TotalAI not only finds AI-specific exposures but also helps teams resolve them faster, protect operational resilience and maintain brand trust.

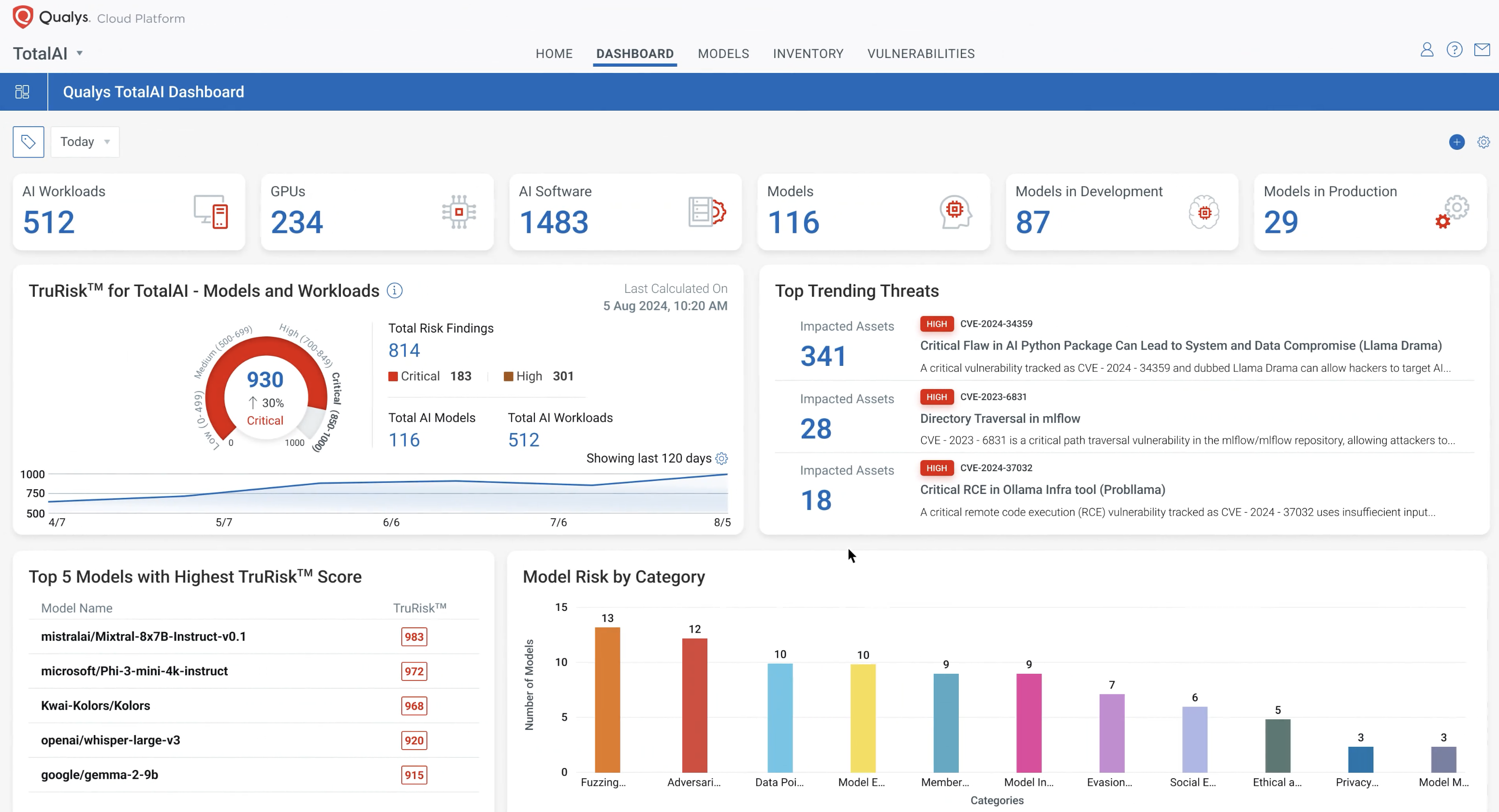

TotalAI offers automatic prioritisation of AI security risks. Findings are mapped to real-world adversarial tactics with MITRE ATLAS and automatically prioritised through the Qualys TruRisk™ scoring engine, helping security, IT and MLOps teams zero in on the most business-critical risks.

“AI is reshaping how businesses operate, but with that innovation comes new and complex risks,” said Sumedh Thakar, president and CEO of Qualys. “TotalAI delivers the visibility, intelligence and automation required to stay agile and secure, protecting AI workloads at every stage… from development through deployment. We are proud to lead the way with the industry’s most comprehensive solution, helping businesses innovate with confidence, while staying ahead of emerging AI threats.”

With the new internal on-premises LLM scanner, organisations can now incorporate comprehensive security testing of their LLMs during development, staging and deployment without exposing models externally. This shift-left approach, which builds security testing of AI-powered applications into existing CI/CD workflows, strengthens both agility and security posture, while ensuring sensitive models remain protected behind corporate firewalls.

Model resilience

TotalAI's detection has been expanded to cover 40 different attack scenarios, including advanced jailbreak techniques, prompt injections and manipulations, multilingual exploits and bias amplification. The expanded scenarios simulate real-world adversarial tactics and strengthen model resilience against exploitation, preventing attackers from manipulating outputs or bypassing safeguards.

The update also adds multimodal threat coverage to protect against cross-modal exploits: TotalAI’s enhanced multimodal detection identifies prompts or perturbations hidden inside images, audio and video files that are designed to manipulate LLM outputs.