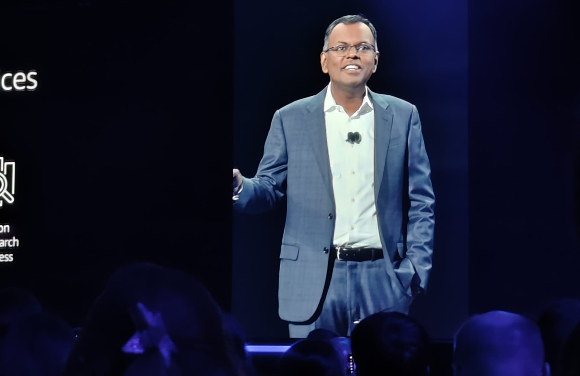

AWS re:Invent 2023 - Day two keynote, Dr Swami

If you want to come across as a cloud guru, then you had better have a) a respected doctorate qualification, b) a senior data science-related position in a major hyperscaler organisation, or c) a name that denotes your status as a teacher, sage, mentor and thinker.

Only one person has all three.

Dr Swami Sivasubramanian is VP of data and Artificial Intelligence at AWS.

Welcoming AWS re:Invent 2023 attendees to this year’s conference and exhibition, Dr Swami suggested that generative AI is making the relationship between humans and AI a symbiotic one, reminiscent of the symbiotic relationships we see played out in the natural world.

But this is not a new connection point. Dr Swami recounted a brief history of computer science and said that we have always looked to solve human problems through our work in software engineering, all the way back to the time of Ada Lovelace and Charles Babbage.

“Lovelace knew that computers can go way beyond number crunching, she saw that they could recognise ‘symbols’ and start to build up a level of intelligence from that point. But she made one thing very clear, she knew that machines could only create outputs based upon what humans instructed machines to do – so she understood the symbiotic fusion point from the start,” said Dr Swami.

Turning to the relationship between data and gen-AI, he described how users are now looking to harness and organise their data within the new, deeper and richer information streams that flow through businesses.

Pausing to remind us that more machine learning (ML) workloads run on AWS than on any other cloud provider, Dr Swami said that, for his money, effective AI is only ever built when four cornerstones are in place:

- Foundation models

- A secure private environment in which to run them

- Easy-to-use tools to build gen-AI applications

- A purpose-built ML infrastructure

No surprise: Dr Swami and the team positioned Amazon Bedrock as the foundation point for accessing all four of these elements. Amazon Bedrock is a service for building and scaling generative AI applications, that is, applications that can generate text, images, audio and synthetic data in response to prompts.

Foundation proliferation

What’s happening now in this space is a focus on widening integration points and cross-platform support. For example, Meta’s Llama 2 70B is now supported in Amazon Bedrock, as are models from Anthropic, the AI company behind the Claude AI model (now at version 2.0).

According to Anthropic, “Claude is a next-generation AI assistant based on Anthropic’s research into training helpful, honest, and harmless AI systems. Accessible through chat interface and API in our developer console, Claude is capable of a wide variety of conversational and text-processing tasks while maintaining a high degree of reliability and predictability.”

Dr Swami moved on to announce expanded support for vector embeddings, a key function in vector databases which, as we know, are fundamental to the operation of many Large Language Model (LLM) applications in the gen-AI space.

Accuracy, latency & cost

A number of engineers from AWS customers (including Intuit, a financial technology platform company) shared the stage with Dr Swami. Their presentations centred on the suggestion that gen-AI success only really comes when we can control accuracy (which of course depends on data) as well as latency and cost, with those latter two factors very obviously shaped by the AWS services in use.

It seems very clear that there is no one-size-fits-all LLM solution, so an event like AWS re:Invent at this point in the decade naturally devoted a lot of time to how we work with the tools in this space.

When we use fine-tuning to align foundation models with an organisation’s own business use cases, AWS tells us that Amazon Bedrock takes away much of the heavy lifting of the infrastructure provisioning this requires. As has been widely discussed, customising models at this level also often involves Retrieval Augmented Generation (RAG). Developers working with AI applications can use RAG to retrieve data from outside a foundation model and augment prompts by adding the relevant retrieved data in context. The external data used to augment prompts can come from multiple sources, such as databases, dedicated document repositories and perhaps APIs.
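To make the retrieve-then-augment flow described above a little more concrete, here is a minimal Python sketch. It is not Amazon Bedrock’s API and makes no AWS calls: the document list, the bag-of-words `embed` function (a toy stand-in for a real embedding model) and the prompt template are all hypothetical illustrations. The sketch ranks documents by cosine similarity to the question and places the best match in the prompt as context.

```python
import math
import re
from collections import Counter

# Hypothetical in-memory "document repository"; a real system might pull
# these from databases, document stores or APIs.
DOCUMENTS = [
    "Refunds are processed within 5 business days of approval.",
    "Premium accounts include priority phone support.",
    "Invoices can be downloaded from the billing dashboard.",
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query (the 'retrieval' step)."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def augment_prompt(query: str, docs: list[str], k: int = 1) -> str:
    """Build a prompt that puts retrieved context ahead of the user's question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


if __name__ == "__main__":
    # This augmented string is what would be sent on to a foundation model.
    print(augment_prompt("How long do refunds take?", DOCUMENTS))
```

In a production system the retrieval step would typically query a vector database holding embeddings from a real model, and the augmented prompt would be passed to a foundation model; both are deliberately left out here so the sketch stays self-contained.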

Dr Swami pointed to the AWS Generative AI Innovation Center (a virtual initiative designed to encourage development and innovation in AI), where a new Custom Model Program for Anthropic Claude has been announced.

AWS Generative AI Innovation Center

Announced in June 2023 with a $100 million investment from AWS behind it to support its creation and growth, the AWS Generative AI Innovation Center brings together a team of strategists, data scientists, engineers and solutions architects who work with users to build bespoke solutions that harness gen-AI.

“It is critical that a business is able to store, access and work with high-quality data to work with generative AI,” said Dr Swami. “Although creating and operating a solid data foundation is not a new idea, it does need to evolve today and embrace a comprehensive, integrated set of data services that can account for the scale and type of use cases that will be dealt with here – and AWS has the broadest set of database services that can deliver every type of service at the point, all with the best price performance. Organizations also need tools to work with data and be able to catalogue and govern data too – and across all these areas AWS provides the tools to be able to execute these functions and tasks.”

… and today, I’m pleased to announce

A beefy two hours in length, Dr Swami’s keynote was peppered with drumroll moments where he drew an extra breath and said, “… and today, I’m pleased to announce [insert new AWS service here]”. There were far too many such announcements to list them all here.

Always engaging, compelling to listen to and worth the early morning starts, Dr Swami provides the day 2 keynote meat sandwiched between AWS CEO Adam Selipsky on day 1 and AWS CTO Dr Werner Vogels on day 3 – and he’s certainly well done.
