AI In Code Series: What has AI ever done for us (the developers)?

Artificial Intelligence has changed.

What started off somewhere around the 1970s or 1980s as an initial notion of human-like computer intelligence was almost before its time.

Hollywood may have been partly to blame, but the AI of the movies in the latter part of the last century was exaggerated and (at that time at least) not practically possible.

As an academic discipline, Artificial Intelligence (AI) actually dates back to 1955, but the creation of ‘cognitive agents’ that would actually provide some level of functional use inside working applications came much later.

We would have to sit through the so-called AI winter first and then wait for the advent of much cheaper processing power and storage closer to the end of the millennium.

The three ages of AI

So AI has changed… it has come out of its prototyping theoretical period (1950s) and come through its Hollywood glamorisation period (2001: A Space Odyssey, Westworld, Blade Runner, The Terminator, Short Circuit and The Matrix, in strict date of release order) and, further, it has come through its ‘we know how to do this, but the web is new, data ubiquity isn’t here yet and the cloud is still in its early stages’ period.

That last ‘we know it, but we can’t do it’ stage of AI evolution was critical, not just because it allowed us to start working with cloud networks more natively and to gain access to wider pools of data… it also allowed developers to take a quantum leap (pun probably intended) forwards in terms of the complexity of the AI algorithms they could build.

So we’ve arrived, here in 2020, with every vendor worth their salt now bidding to apply AI functions in the applications they deliver so that users can do things more intuitively, more autonomously and automatically and (of course) more intelligently.

But we don’t care about the users – okay we do, but hear us out – in this case we care about the developers building these new smarter applications… and so we ask: what has AI ever done for us (the programmers, developers, coders and software engineers of all disciplines)?

What has AI ever done for us developers?

As Computer Weekly’s Cliff Saran has already highlighted in ‘The art of developing happy customers’, we know that AI (in some form) has existed in developer tools for a while.

“Rule-based checking has existed since programmers began using compilers and high-level programming languages to develop applications. Compilers and static code analysis tools effectively check that the lines of code are constructed in the right format,” notes the above linked feature.
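To make the idea of rule-based checking concrete, here is a minimal sketch in Python. It is an illustration only and not something taken from the Computer Weekly feature: the function name `find_bare_excepts` and the single rule it enforces (flagging bare `except:` clauses) are assumptions chosen for the example. It uses Python's standard `ast` module to parse source code and apply one fixed rule, which is roughly what a static analysis tool does many times over.

```python
import ast
from typing import List


def find_bare_excepts(source: str) -> List[int]:
    """Return the line numbers of bare `except:` clauses in the source.

    This is one hard-coded rule (hypothetical, for illustration). A real
    static analyser applies many such rules in the same way.
    """
    # ast.parse raises SyntaxError if the code is not well formed,
    # which is the 'constructed in the right format' part of the check.
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


SAMPLE = """\
try:
    risky()
except:
    pass
"""

print(find_bare_excepts(SAMPLE))
```

The rule never changes unless a developer rewrites it, and that is the limit of purely rule-based tooling that the rest of this piece goes on to question.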

This August 2020 Computer Weekly analysis piece quotes PayPal chief technology officer (CTO) Sri Shivananda on this exact subject.

Shivananda believes that AI could be trained to create some applications.

“Code is made of building blocks, which can be built on to make large, complex systems. As such, a programmer could write a script to speed up a repetitive task. This may be enhanced into a simple app. Eventually, it could become a payment system. At the very bottom of the software stack, there will be a database and an operating system,” said Shivananda.

His logic is that AI can assist (and should assist more) in coding. Why? Because developers can create knowledge in code and dynamic logic, but rule-based code writing can only go so far, i.e. it now needs an additional leg-up to smartness beyond its initially deployed form.

Looking ahead then, we need to ask what AI can do for code logic, function direction, query structure and even for basic read/write functions… what tools are in development?

In this age of components, microservices and API connectivity, how should AI work inside coding tools to direct programmers to more efficient streams of development so that they don’t have to ‘reinvent the wheel’ every time?

What are the developer pain points that AI can help with… is debugging ripe for AI and/or should log file analytics provide more insight into the health of applications and provide AI-style ‘there’s a problem here’ alerts?
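As a rough illustration of what a ‘there’s a problem here’ alert could look like at its very simplest, the sketch below flags minutes in which an application logged far more errors than its own recent history suggests. Everything in it is an assumption made for the example: the function name `flag_error_spikes`, the per-minute error counts and the threshold are all hypothetical, and the method is a plain statistical baseline rather than machine learning. A genuinely AI-driven tool would learn far richer patterns, but the shape of the question is the same.

```python
from statistics import mean, pstdev
from typing import List


def flag_error_spikes(errors_per_minute: List[int], threshold: float = 3.0) -> List[int]:
    """Return the indexes of minutes whose error count is unusually high.

    Each minute is compared with the mean and standard deviation of all
    earlier minutes. The first three minutes are skipped because there
    is no meaningful baseline yet.
    """
    alerts = []
    for i in range(3, len(errors_per_minute)):
        baseline = errors_per_minute[:i]
        mu, sigma = mean(baseline), pstdev(baseline)
        # Use a floor of 1.0 so a perfectly flat baseline (sigma == 0)
        # does not turn every tiny change into an alert.
        if errors_per_minute[i] > mu + threshold * max(sigma, 1.0):
            alerts.append(i)
    return alerts


# Hypothetical error counts extracted from a log file, one per minute.
counts = [2, 3, 2, 4, 3, 40, 3]
print(flag_error_spikes(counts))
```

With these made-up counts, only the minute containing 40 errors is flagged, while the ordinary fluctuation between 2 and 4 passes silently.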

If a service mesh exists to deliver an in-application layer of infrastructure intelligence in order to connect, corral and manage service-to-service connectivity between microservices and connections to APIs (and to also perform service discovery, load balancing, encryption, observability, traceability, authentication and authorisation)… then should we think of that as an element of AI at the coding tool level?

When we do create these AI functions, how will developers know what’s out of fashion, less useful than something else or on the verge of becoming a legacy tool that won’t future-fit next-generation systems?

We need to know how AI will find itself embedded into the tools that developers use every day… only then, surely, will the programmers themselves be able to build really smart applications with AI at the user-facing surface, right?

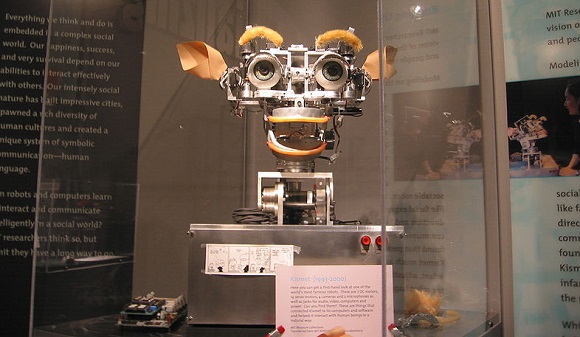

Kismet robot at MIT Museum – free image source: Wikipedia