
Brain researchers get NVMe-over-RoCE for super-fast HPC storage

French neurological researchers deploy Western Digital OpenFlex NVMe array for super-fast HPC storage with Ethernet-based NVMe-over-Fabrics connectivity across several floors

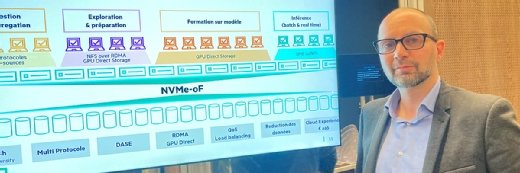

The Paris Brain Institute (ICM) has deployed an OpenFlex E3000 all-flash array from Western Digital with NVMe media and NVMe-over-Fabrics RoCE connectivity out to servers.

The solution offered the twin benefits of being very fast – it’s not an ordinary storage area network (SAN) – and being able to deliver input/output (I/O) across several floors at the ICM’s premises for its high-performance computing (HPC) needs.

The ICM was formed in 2010 to bring together the work of 700 researchers. Information collected during medical imaging and microscopy undergoes some processing at workstations, but between data capture and analysis the data is also centralised in the basement datacentre at the ICM.

“The challenge with the deployment is that to traverse the floors that separate the labs and the datacentre, traffic has to travel via Ethernet cables and switches that also handle file shares,” says technical lead at the ICM, Caroline Vidal.

“Historically, an installation like this would have used NAS [network-attached storage], which wouldn’t really have the performance to match the read and write speeds of the instruments. With the latest microscopes, data takes longer and longer to save, and making it available at workstations sometimes means researchers waiting hours in front of their screens.

“Initially, we chose a NAS from Active Circle that had a number of features we considered essential, such as data security. But we came to realise that losing data wasn’t really an issue for our researchers – the real pain point was the wait to get to their data.

“By 2016, we’d decided to abandon the NAS and to share all findings via the Lustre storage on our supercomputer, because it is built for rapid concurrent access,” says Vidal.

As at other research institutes, the ICM’s datacentre is built around its supercomputer. Data being processed is stored in a Lustre file system cluster then archived in object storage, with data in use by scientists made available from a NAS.

But after three years, that approach had reached its limit. The 3PB of capacity on the Lustre file system was saturated with observation data, and there was no room for any more.

NVMe/RoCE: speed of a SAN, easy deployment like NAS

Vidal adds: “In 2019, we started to think about decentralising storage from the workstations in the sense of distributing all-flash storage between floors. The difficulty was that our building isn’t well-adapted to deploying things in this way. We would have needed mini datacentres in our corridors, and that would have meant a lot of work.”

So, one of Vidal’s technical architects approached Western Digital, which proposed that the ICM carry out a proof-of-concept of a then-unreleased NVMe-over-Fabrics solution.

“What was interesting about the OpenFlex product was that, with NVMe/RoCE, it would be possible to install it in our datacentre and to connect it to workstations on a number of floors via our existing infrastructure,” says Vidal.

“Physically, the product is easier to install than a NAS box. It is also faster than the flash arrays we would have deployed right next to the labs.”

NVMe-over-Fabrics is a storage protocol that allows NVMe solid-state drives (SSDs) to be treated as extensions of non-volatile memory connected via the server PCIe bus. It does away with the SCSI protocol as an intermediate layer, which tends to form a bottleneck, and so allows for throughput several times higher than that of a traditionally connected array.

NVMe using RoCE (RDMA over Converged Ethernet) is an implementation of NVMe-over-Fabrics that uses largely standard Ethernet cables and switches. The benefit here is that this infrastructure is already deployed in a lot of office buildings.

NVMe-over-RoCE doesn’t make use of TCP/IP layers. That’s distinct from NVMe-over-TCP, which is a little less performant and doesn’t allow for storage and network traffic to pass across the same connections.

“At first, we could connect OpenFlex via network equipment that we had in place, which was 10Gbps. But it was getting old, so in a fairly short time we moved to 100Gbps, which allowed OpenFlex to flex its muscles,” says Vidal.

ICM verified the feasibility of the deployment with its integration partner 2CRSi, which came up with the idea of implementing OpenFlex like a SAN in which the capacity would appear local to each workstation.

“The OpenFlex operating system allows it to connect with 1,000 client machines,” says 2CRSi technical director Frédéric Mossmann. “You just have to partition all the storage into independent volumes, with up to 256 possible, and each becoming the drive for four workstations. Client machines have to be equipped with Ethernet-compatible cards, such as those from Mellanox that communicate at 10Gbps to support RoCE.”
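The arithmetic behind Mossmann’s figures can be sketched in a few lines of Python. This is an illustration only: the constant and function names are hypothetical and are not part of any OpenFlex interface, and the consecutive assignment of workstations to volumes is an assumption made for the example.

```python
# Hypothetical illustration only: this is not the OpenFlex management API.
MAX_VOLUMES = 256        # volume limit quoted by 2CRSi
CLIENTS_PER_VOLUME = 4   # workstations sharing each volume, per the quote


def max_clients() -> int:
    """Largest number of workstations the partitioning scheme can serve."""
    return MAX_VOLUMES * CLIENTS_PER_VOLUME


def volume_for_client(client_index: int) -> int:
    """Return the 0-based volume serving a 0-based client index.

    Assumes clients are assigned to volumes in consecutive groups of four.
    """
    if not 0 <= client_index < max_clients():
        raise ValueError("client index exceeds the capacity of the scheme")
    return client_index // CLIENTS_PER_VOLUME
```

With 256 volumes each shared by four workstations, the scheme tops out at 1,024 clients, which is in line with the roughly 1,000 machines Mossmann cites.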

Vidal adds: “We carried out tests, and the most prominent result was latency – which was below 40µs. In practice, that allows for image capture in a completely fluid manner so a workstation can view sequences without stuttering.”

Open system

The E3000 chassis was deployed at the start of 2020 and occupies 3U of rack space. Five of its six vertical slots are fitted with 15TB NVMe modules for a total of 75TB. According to Western Digital, each module offers throughput of 11.5GBps for reads and writes and around 2 million IOPS.
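As a rough check on those figures, the chassis totals can be worked out as below. The per-module numbers come from the article; the assumption that throughput and IOPS scale linearly with the number of modules is ours, so the aggregate values are best read as theoretical upper bounds rather than measured results. All names are hypothetical.

```python
# Figures quoted in the article; linear scaling across modules is an assumption.
TOTAL_SLOTS = 6
POPULATED_SLOTS = 5
MODULE_CAPACITY_TB = 15
MODULE_THROUGHPUT_GBYTES = 11.5   # gigabytes per second per module (vendor figure)
MODULE_IOPS = 2_000_000           # approximate vendor figure per module


def chassis_summary(populated: int = POPULATED_SLOTS) -> dict:
    """Sum capacity and theoretical peak performance over populated slots."""
    if not 0 <= populated <= TOTAL_SLOTS:
        raise ValueError("populated slots must be between 0 and TOTAL_SLOTS")
    return {
        "capacity_tb": populated * MODULE_CAPACITY_TB,
        "peak_throughput_gbytes": populated * MODULE_THROUGHPUT_GBYTES,
        "peak_iops": populated * MODULE_IOPS,
        "free_slots": TOTAL_SLOTS - populated,
    }
```

For the five populated slots this gives 75TB of capacity, matching the total stated above, with one slot left free for expansion.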

All these elements are directed by a Linux controller accessible via command line or from a Puppet console when partitioning drives or dynamically allocating capacity to each user.

“One thing that really won us over is the openness of the system. We are very keen on free technologies in the scientific world,” says Vidal.

“The fact of knowing that there is a community that can quickly develop extensions for use cases that we need, but also that any maker can provide compatible SSD modules, reassures us even though we have chosen a relatively untested innovative solution,” adds Vidal, explaining how the ICM is playing the part of a test case for OpenFlex.

At the ICM, OpenFlex supports SSD modules of up to 61.4TB in raw capacity. At the back end, each SSD module has two 50Gbps Ethernet ports in QSFP28 optical connector format.
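The port figures can be set against the 11.5GBps per-module throughput that Western Digital quotes. The sketch below converts the combined port speed from gigabits to gigabytes per second; it ignores protocol overhead, which is a simplifying assumption, and the names are hypothetical.

```python
PORTS_PER_MODULE = 2
PORT_SPEED_GBITS = 50            # gigabits per second per port
QUOTED_THROUGHPUT_GBYTES = 11.5  # gigabytes per second per module (vendor figure)


def module_line_rate_gbytes(ports: int = PORTS_PER_MODULE,
                            port_speed_gbits: float = PORT_SPEED_GBITS) -> float:
    """Raw line rate of one module in gigabytes per second (8 bits per byte)."""
    return ports * port_speed_gbits / 8


def link_utilisation(quoted: float = QUOTED_THROUGHPUT_GBYTES) -> float:
    """Fraction of the raw line rate that the quoted throughput represents."""
    return quoted / module_line_rate_gbytes()
```

Two 50Gbps ports give a raw line rate of 12.5GBps per module, so the quoted 11.5GBps would correspond to about 92% of what the ports can carry.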

“The array offers a multitude of uses,” says Vidal. “While waiting to modernise our Ethernet infrastructure, we have connected OpenFlex with several client machines. In time, we will connect it to diskless NAS for backup in the labs. These are connected to workstations via a traditional network so as to limit the expense of deploying Mellanox RoCE cards.

“At the same time, we have connected OpenFlex to the rest of the datacentre to validate that we can provide Lustre metadata during heavy processing.”

Vidal says the Covid-19 pandemic has slowed down the deployment, but she has already seen benefits.

“Our scientists are not limited by the slow speed of data movement in their clinical analysis pipeline. They can now work on images with four times the previous resolution. We don’t doubt that this will help to deepen the understanding of neurological illnesses and to speed the introduction of new treatments,” she adds.
