Confluent Current 2023: Day two keynote

Confluent hosted day two of Current 2023, billed as the ‘next generation of Kafka Summit’, in San Jose, California this week.

The Computer Weekly Open Source Insider team were in residence and ready to download – eschewing offers of tequila at the previous night’s show party and with a remarkably clear head, we headed in.

The session itself was entitled Kafka, Flink & beyond.

In this keynote, leaders from the Kafka and Flink communities highlighted recent contributions, upcoming project improvements and the applications that users are building to shape the future of data streaming.

Introduced by Danica Fine in her role as senior developer advocate at Confluent, the conversation turned to Apache Flink.

Peanut butter & jelly

Ismael Juma, senior principal engineer at Confluent, was joined by Martijn Visser, senior product manager at Confluent. Both spoke about where stream processing is going next. Visser reminded us that it’s all about transforming data into a different form and doing it in real-time with Apache Kafka and Flink, which he suggests go together ‘like peanut butter & jelly’ today.

Juma explained how the long data cycles of the past needed an evolution – the way we built for data at rest in the past simply does not fit the sophisticated application structures of today.

Turning to where Apache Flink can now really help produce low-latency responses, Visser said that the Flink community (with some 170 committers) has shown great diversity and superb data engineering competency that has helped ‘harden’ the technology and get it to where it is today.

“We all know Kafka is the foundation of the streaming world, but our work [here] is not done, there is still much that we can do with forward-looking developments that can make it even easier [with a key focus on making Kafka more elastic and easier to operate] to use,” said Juma, who also pointed to KRaft in Apache Kafka 3.5, a technology that was announced at this event last year.

KRaft is a consensus protocol that was introduced to remove Apache Kafka’s dependency on ZooKeeper, so that Kafka no longer has to rely on a separate system for metadata management.

Next up, Satish Duggana, senior staff engineer at Uber, reminded us that Uber has a massive Kafka platform deployment serving millions of messages per day to drive and populate real-time events across the platform. This is the part of Uber that underpins the ‘dynamic pricing’ capability that it uses, something we normal users know as ‘surge pricing’, which can be quite painful at times.

Simplified protocols

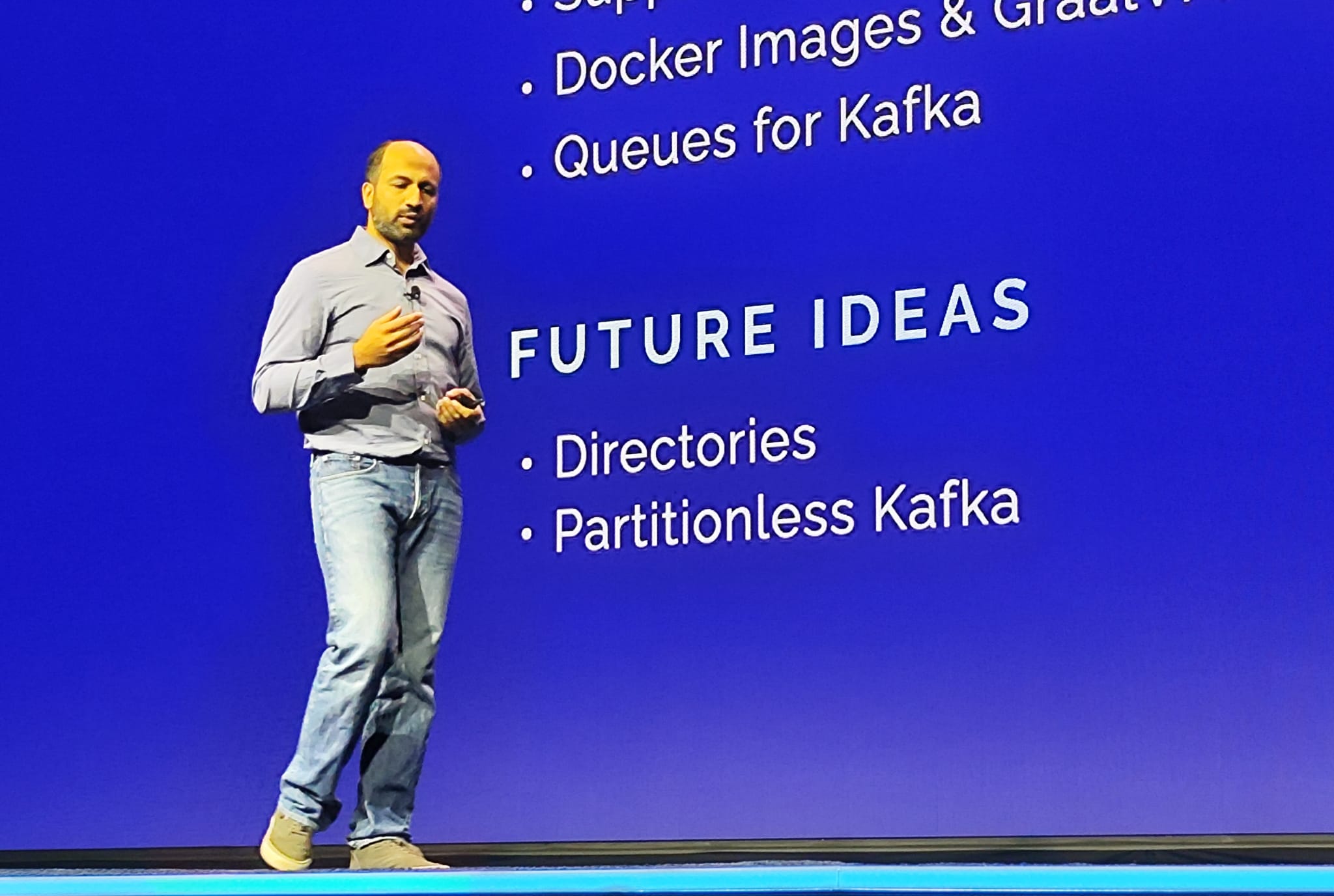

On the road to the future, Juma and team say that they are focused on creating the simpler protocol (or set of protocols) that they know Kafka needs to deliver. “Development experience is critical for any software project, but this truth is even more so for an open source project like Apache Kafka,” he said.

Speakers compared message queuing and event streaming, and said that messages may be used in a queuing system in a certain way (for example, in an order processing application), while event streaming mechanics work in a different but related way.

Both techniques are useful, but Apache Kafka has a broader way of handling storage. Software application developers building data streaming applications therefore need to remember what tools they are using at any given moment in time and be aware of the capabilities and restrictions of the technologies at hand.
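As a rough illustration of the distinction the speakers drew, here is a toy Python sketch. The `SimpleQueue` and `SimpleLog` classes are hypothetical names invented for this example and are not Kafka APIs; real systems add partitions, retention policies, acknowledgements and much more. The sketch only assumes the general idea that a queue hands each message to one reader and then forgets it, while a log keeps its records and lets each consumer track its own position.

```python
from collections import deque


class SimpleQueue:
    """Toy message queue (hypothetical): a message is gone once it is received."""

    def __init__(self):
        self._messages = deque()

    def send(self, message):
        self._messages.append(message)

    def receive(self):
        # Destructive read: the message is removed; None means the queue is empty.
        return self._messages.popleft() if self._messages else None


class SimpleLog:
    """Toy event log (hypothetical): records are kept, consumers track offsets."""

    def __init__(self):
        self._records = []
        self._offsets = {}  # consumer name -> next offset to read

    def append(self, record):
        self._records.append(record)

    def poll(self, consumer):
        # Non-destructive read: return everything this consumer has not yet seen.
        offset = self._offsets.get(consumer, 0)
        batch = self._records[offset:]
        self._offsets[consumer] = len(self._records)
        return batch


queue = SimpleQueue()
log = SimpleLog()
for order in ["order-1", "order-2"]:
    queue.send(order)
    log.append(order)

# Each queued order is delivered once, after which the queue is empty.
queue_reads = [queue.receive(), queue.receive(), queue.receive()]

# Two independent consumers each read the full history from the log.
billing_reads = log.poll("billing")
shipping_reads = log.poll("shipping")
```

In this sketch the order processing case maps naturally onto the queue (one worker handles each order), while the log lets a billing service and a shipping service both read the same events at their own pace.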

KIP – Kafka Improvement Proposal

The Confluent team talked here about KIPs, or the process behind a Kafka Improvement Proposal.

According to the team, [they] want to make Kafka a core architectural component for users through the KIP process.

“We support a large number of integrations with other tools, systems and clients. Keeping this kind of usage healthy requires a high level of compatibility between releases – core architectural elements can’t break compatibility or shift functionality from release to release. As a result each new major feature or public api has to be done in a way that we can stick with it going forward,” notes the KIP explanatory overview.

The team say that this whole process means that when making these kinds of changes, they need to think through what the community is doing prior to release. The KIP overview also notes that all technical decisions have pros and cons, so it is important that developers capture the thought process that leads to a decision or design to avoid flip-flopping needlessly.

Customer speakers on day two also included Tobias Nothaft, sub-product owner streaming platform at BMW Group, plus Hitesh Seth, managing director of Chase Data, AI/ML platform architecture and engineering at JPMorgan Chase. Anna McDonald, technical voice of the customer at Confluent, rounded out this portion of Current 2023.

Why it’s Apache Kafka

As a final note, for now, on this event: Confluent CEO Jay Kreps (who, incidentally, appears to smile and enjoy his technology subject matter way more than 90% of other enterprise tech vendor leaders) noted that when faced with naming Apache Kafka, his co-innovators realised that they either had to go with an (arguably sterile) corporate three-letter-acronym name, or ‘something goofy’ as he put it. He’d read a lot of Harry Potter and Franz Kafka and for a while the whole development may have been known as something Potter-related (another project, Voldemort, does actually exist)… but you can see Franz won the day.

Confluent obviously called the Current event Current to convey the feeling of a current and constant flow of data inside streaming systems… happily, the company also served up currants at the breakfast granola stations and dried fruit does keep most people regular, nice touch guys – see you next year.

Ismael Juma, senior principal engineer at Confluent.