Security Think Tank: Design security in to reap container benefits

Provided container security basics are built into your development and runtime environment from the start, containerised services and applications can provide rapid – and secure – achievement of business objectives

Containers have quickly become a fundamental part of DevOps. Their lightweight and portable nature makes them attractive to organisations of all sizes, from small startups trying to reduce costs to large multibillion-pound companies wanting to ensure service availability.

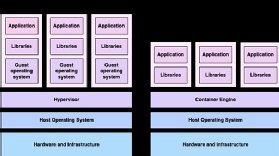

Compared with traditional hypervisor-based virtualisation, containers are not weighed down by emulated hardware, or the bloat that comes from replicating an entire operating system. This makes containers faster to spin up, easier to transport and considerably more scalable.

As a result, virtual machines are being overshadowed by the widespread adoption of containers, and there is no sign of this popularity slowing.

Design is key in bringing scalability, speed and security advantages

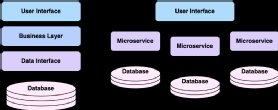

According to research by Datadog, the number of Kubernetes pods being deployed doubled between 2019 and 2021. This, combined with the increase in stateful containers, implies that more organisations are migrating their traditional monolithic applications to Kubernetes, which, for many, means moving to a microservice-based development approach.

Shifting to a microservice architecture can provide more flexibility and, if managed correctly, greater security. This is due to the nature of the architecture, where each service is given resources to meet its own requirements and is treated as a separate entity. This allows services to be edited or removed with little impact on the rest of the application.

An attacker who gains access to a monolithic application is likely to have access to all the resources within it, but in a containerised solution, they may be restricted to a single pod. Containers also allow continuous re-paving of the application, ridding it of vulnerabilities with little impact on user experience.

One thing to consider when increasing the number of pods in any given cluster is the danger of container sprawl. Having separate containers for everything can be attractive for the reasons mentioned above, but this can be overdone. Too many containers can lead to a lack of observability and make troubleshooting difficult. This can create unintentional attack paths as malicious users may exploit legacy containers that have been forgotten about.
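One way to keep sprawl visible is to flag containers that have not been redeployed for a long time. The following is a minimal sketch rather than a definitive tool: the inventory format (a list of records with `name` and `last_deployed` fields) and the 90-day threshold are assumptions made for illustration, and a real inventory would come from your orchestrator or asset register.

```python
from datetime import datetime, timedelta, timezone


def find_stale_containers(inventory, now, max_age_days=90):
    """Return the names of containers not redeployed within max_age_days.

    `inventory` is a hypothetical list of dicts with "name" and
    "last_deployed" (a timezone-aware datetime) keys.
    """
    cutoff = now - timedelta(days=max_age_days)
    return sorted(c["name"] for c in inventory if c["last_deployed"] < cutoff)


if __name__ == "__main__":
    now = datetime(2022, 6, 1, tzinfo=timezone.utc)
    inventory = [
        {"name": "billing", "last_deployed": datetime(2022, 5, 20, tzinfo=timezone.utc)},
        {"name": "legacy-report", "last_deployed": datetime(2021, 11, 1, tzinfo=timezone.utc)},
    ]
    print(find_stale_containers(inventory, now))
```

Anything the check returns is a candidate for review: either it is re-paved and brought back under observation, or it is retired.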

Investment in security by cloud providers

Another recent trend, which feels like a natural result of increased container adoption, is the shift towards more managed container solutions and using products such as AWS Fargate. Container orchestration solutions, such as Kubernetes, are famously complicated to maintain and often require a large investment of time to set up clusters competently.

Having your cluster orchestrated for you by a cloud provider makes deployment quicker and easier than traditional methods of self-management. However, you are always bound by the shared responsibility model of providers, and container security will always be your team’s responsibility.

Common vulnerabilities and exposures

The appeal of containers being “able to run on anyone’s machine” is a double-edged sword because it means they are also vulnerable on anyone’s machine. Perhaps partly because of their ease of use and popularity, containers are frequent targets for attackers, and the software relating to them has a considerable number of common vulnerabilities and exposures (CVEs). These vulnerabilities can lie in any part of the container, from the image to the runtime itself, to third-party applications running inside.

A key example can be seen in the CVEs for Docker in 2020. All 32 of these have a Common Vulnerability Scoring System (CVSS) score of 10.0 and relate to the same issue in separate official Docker images. None of these images had an admin password set by default; if a developer did not apply their own security settings to these images, a malicious user could easily escalate their privileges to exploit the entire cluster.
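A missing default password is the kind of issue a simple automated check can catch before an image is deployed. The sketch below assumes the image keeps its accounts in the conventional colon-separated `/etc/shadow` layout, where an empty second field means the account has no password; the function name and the sample data are illustrative only.

```python
def accounts_without_password(shadow_text):
    """Return account names whose password field is empty.

    Expects text in /etc/shadow layout: name:password:...,
    where an empty password field means no password is required.
    """
    flagged = []
    for line in shadow_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        fields = line.split(":")
        if len(fields) >= 2 and fields[1] == "":
            flagged.append(fields[0])
    return flagged


if __name__ == "__main__":
    sample = "root:::0:::::\ndaemon:*:18000:0:99999:7:::\napp:!:18000::::::"
    print(accounts_without_password(sample))
```

Accounts marked `*` or `!` are locked rather than passwordless, so the sketch deliberately leaves them alone.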

Even when considering images from reputable repositories, many third-party libraries may be pulled in, leading to a heightened supply chain risk. For these situations, having controls in place to dismantle an image, create a software bill of materials (SBOM) and check each component becomes a mandatory requirement.
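Checking each component can be as simple as comparing the SBOM against a list of versions the organisation will not accept. The sketch below assumes an SBOM in JSON with a top-level `components` list of `name` and `version` entries, loosely modelled on the CycloneDX layout; the deny list and component names are hypothetical, and in practice the list would be fed from a vulnerability database.

```python
import json


def flag_components(sbom_json, denied):
    """Return "name@version" for every SBOM component found in `denied`.

    `denied` is a hypothetical set of (name, version) tuples.
    """
    sbom = json.loads(sbom_json)
    findings = []
    for comp in sbom.get("components", []):
        key = (comp.get("name"), comp.get("version"))
        if key in denied:
            findings.append(f"{key[0]}@{key[1]}")
    return findings


if __name__ == "__main__":
    sbom_json = json.dumps({
        "components": [
            {"name": "example-lib", "version": "1.0.0"},
            {"name": "other-lib", "version": "2.3.1"},
        ]
    })
    denied = {("example-lib", "1.0.0")}
    print(flag_components(sbom_json, denied))
```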

Also, development pipelines must automatically check that containers are being developed securely and, where necessary, enforce the organisation’s security policy. This all needs to be carried out autonomously, relying heavily on guardrail implementation, security automation using policy agents and runtime enforcement.
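As an illustration of a pipeline guardrail, the sketch below inspects a Kubernetes pod manifest, already parsed into a dictionary, and reports containers that break two example rules: no privileged mode, and `runAsNonRoot` must be set. The rules themselves are assumptions chosen for the example, not a recommended baseline, and the code does not represent the API of any particular policy agent. For brevity it only looks at container-level `securityContext` settings.

```python
def policy_violations(pod):
    """Return human-readable violations for a parsed pod manifest."""
    violations = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}
        if ctx.get("privileged") is True:
            violations.append(f"{name}: privileged mode is not allowed")
        if ctx.get("runAsNonRoot") is not True:
            violations.append(f"{name}: runAsNonRoot must be true")
    return violations


if __name__ == "__main__":
    pod = {
        "spec": {
            "containers": [
                {"name": "web", "securityContext": {"runAsNonRoot": True}},
                {"name": "debug", "securityContext": {"privileged": True}},
            ]
        }
    }
    for violation in policy_violations(pod):
        print(violation)
```

A pipeline step could fail the build whenever the returned list is non-empty, so the policy is enforced without anyone having to remember to check.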

Design security in to reap the full benefits of containers

Containers aren’t going away any time soon – and this is not a bad thing. Containerisation provides many benefits to all manner of applications, but security must not be sacrificed for convenience. Organisations should ensure that, regardless of how their containers are managed, they understand and carefully build the right environment, with the right controls in place to manage risk.

Basic measures, such as using verified third-party software and base images, or signed images and libraries, together with deep observability and automated updates, help ensure there aren’t any “weak link” containers that may provide a pivot point for attackers.
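One small, checkable step in this direction is to require that images are referenced by an immutable digest rather than a mutable tag. The sketch below flags references that do not end in a `sha256` digest; the registry name is a placeholder, and pinning by digest is a complement to signature verification, not a substitute for it.

```python
import re

# An image reference pinned by digest ends with "@sha256:" plus 64 hex characters.
_DIGEST = re.compile(r"@sha256:[0-9a-f]{64}$")


def unpinned_images(image_refs):
    """Return the image references that are not pinned to a sha256 digest."""
    return [ref for ref in image_refs if not _DIGEST.search(ref)]


if __name__ == "__main__":
    refs = [
        "nginx:latest",
        "registry.example.com/app@sha256:" + "a" * 64,
    ]
    print(unpinned_images(refs))
```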

Provided container security basics are built into your development and runtime environment from the start, containerised services and applications can provide rapid (and secure) achievement of business objectives.

Mairi MacLeod and Farrukh Ahmad are cyber security experts at PA Consulting