
EU AI Act explained: What AI developers need to know

A guide to help enterprises building and deploying AI systems understand EU AI regulations

The European Parliament approved the European Union (EU) AI Act in March 2024. Its goal is to provide artificial intelligence (AI) developers and deployers with what EU policymakers describe as “clear requirements and obligations regarding specific uses of AI”.

They also hope regulation will reduce administrative and financial burdens for business, in particular small and medium-sized enterprises (SMEs).

The Act aims to provide a regulatory framework for operating AI systems with a focus on safety, privacy and preventing the marginalisation of people. There are a number of AI deployment use cases that are excluded from the Act. However, some of its critics believe there are too many loopholes, particularly around national security, that may limit its effectiveness.

The EU and other jurisdictions are looking at how to address AI safety concerns because such systems may be trained on data that can change over time.

The Organisation for Economic Cooperation and Development’s (OECD’s) AI group warns that AI-based systems are influenced by societal dynamics and human behaviour.

AI risks – and benefits – can emerge from the interplay of technical aspects combined with societal factors related to how a system is used, its interactions with other AI systems, who operates it, and the social context in which it’s deployed.

Broadly speaking, AI legislation is focused on the responsible use of AI systems. This covers how they are trained, data bias and explainability, all areas where errors can have a detrimental impact on an individual.

The OECD.ai policy observatory’s Artificial intelligence risk management framework notes that the changes occurring in AI training data can sometimes unexpectedly affect system functionality and trustworthiness in ways that are hard to understand. AI systems and the contexts in which they are deployed are frequently complex, making it difficult to detect and respond to failures when they occur, the OECD.ai policy observatory warns.

The EU AI Act takes a risk-based approach to AI safety, with four levels of risk for AI systems, as well as an identification of risks specific to a general purpose model.

It’s part of a wider package of policy measures to support the development of trustworthy AI, which also includes the AI Innovation Package and the Coordinated Plan on AI. The EU says these measures will guarantee the safety and fundamental rights of people and businesses when it comes to AI, and will strengthen uptake, investment and innovation in AI across the EU.

Implications of EU AI Act categorisation

The EU AI Act provides a regulatory framework that defines four levels of risk for AI systems: unacceptable risk; high risk; limited risk; and minimal risk.

- Unacceptable risk: AI systems that are considered a clear threat to the safety, livelihoods and rights of people are banned. Such systems range from those used for social scoring by governments to toys using voice assistance that encourages dangerous behaviour.

- High risk: Developers need to prove they have in place adequate risk assessment and mitigation systems. The EU AI Act also stipulates that they ensure high-quality datasets are used to feed the AI system and logs of activity need to be in place to ensure traceability of results. In addition, the EU AI Act requires that developers keep detailed documentation providing all information necessary on the system and its purpose for authorities to assess its compliance.

- Limited risk: Developers building these systems need to ensure transparency. The EU AI Act states that when people use AI systems such as chatbots, they should be made aware that they are interacting with a machine so they can take an informed decision to continue or step back. The Act also stipulates that providers of such systems have to ensure that AI-generated content is identifiable.

- Minimal or no risk: The EU AI Act allows the free use of minimal-risk AI systems so developers generally do not have to take additional steps to ensure the systems they deploy are compliant. Such AI software includes AI-enabled video games or spam filters.
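For development teams, the four tiers above can be read as a checklist lookup. The following is a minimal Python sketch of that idea, not legal guidance: the `RiskTier` enum, the `OBLIGATIONS` table and both helper functions are hypothetical names introduced here, and the obligation strings simply paraphrase the list above.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk levels described in the article (names are illustrative)."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the tier descriptions above.
# This is an illustrative summary, not the legal text of the Act.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned"],
    RiskTier.HIGH: [
        "risk assessment and mitigation system",
        "high-quality datasets",
        "activity logs for traceability",
        "detailed documentation for authorities",
    ],
    RiskTier.LIMITED: [
        "tell users they are interacting with a machine",
        "make AI-generated content identifiable",
    ],
    RiskTier.MINIMAL: [],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the checklist for the given tier."""
    return list(OBLIGATIONS[tier])


def is_deployable(tier: RiskTier) -> bool:
    """Only the unacceptable-risk tier is banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


if __name__ == "__main__":
    for item in obligations_for(RiskTier.HIGH):
        print("-", item)
```

Deciding which tier a real system falls into is a legal assessment that this sketch deliberately leaves out; it only shows how the resulting obligations might be tracked once the tier is known.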

Key takeaways from the EU AI Act

The Act provides a set of guardrails for general purpose AI. Developers of such systems need to draw up technical documentation, ensure the AI complies with EU copyright law, and share detailed summaries about the content used for training. The Act also sets out different rules for different levels of risk.

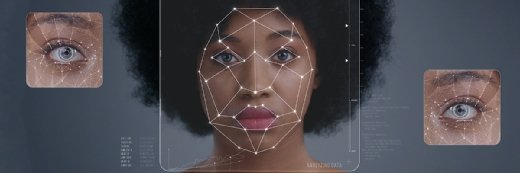

In the “unacceptable risk” category, the Act has attempted to limit the use of biometric identification systems by law enforcement. Biometric categorisation systems that use sensitive characteristics (for example, political, religious and philosophical beliefs, sexual orientation, and race) are prohibited.

High-risk AI covered by the Act includes AI systems that are used in products falling under the EU’s product safety legislation. This includes toys, aviation, cars, medical devices and lifts. It also covers AI used in a number of decision-support roles such as law enforcement, asylum, migration and border control, education, employment, and the management of critical national infrastructure.

Products falling into the high-risk category need to be assessed before being put on the market, and also throughout their lifecycle. The Act gives people the right to file complaints about AI systems to designated national authorities. Generative AI (GenAI), such as ChatGPT, is not classified as high-risk. Instead, the Act stipulates that such systems need to comply with transparency requirements and EU copyright law.
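The Act does not prescribe how a transparency requirement should be implemented in software. As one possible illustration, the Python sketch below prefixes a chatbot's first reply with a disclosure notice and carries a machine-readable flag marking the content as AI-generated. The `DISCLOSURE` wording, the `ChatReply` type and its `ai_generated` field are all assumptions made for this example.

```python
from dataclasses import dataclass

# Hypothetical wording; the Act does not mandate a specific phrase.
DISCLOSURE = "You are chatting with an AI system."


@dataclass
class ChatReply:
    text: str
    # Hypothetical machine-readable marker so downstream tools
    # can identify the content as AI-generated.
    ai_generated: bool = True


def first_reply(model_output: str) -> ChatReply:
    """Prefix the first reply in a session with the disclosure notice."""
    return ChatReply(text=f"{DISCLOSURE}\n\n{model_output}")


if __name__ == "__main__":
    reply = first_reply("Hello, how can I help?")
    print(reply.text)
```

Keeping the flag separate from the visible text means the disclosure shown to the user and the marker used by other systems can evolve independently.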

Who is affected by the EU AI Act?

Stephen Catanzano, senior analyst at Enterprise Strategy Group, notes that the AI Act applies to providers of AI systems. This, he says, means it covers companies that develop and market AI systems or provide such systems under their own name or trademark, whether for payment or free of charge.

Additionally, Catanzano points out that the AI Act covers importers and distributors of AI systems in the EU, and also extends to “deployers”, which are defined as natural or legal persons using AI under their authority in their professional activities.

Principal analyst Enza Iannopollo urges organisations, despite the lack of detail around technical standards and protocols, to prepare at a minimum to measure and report on the performance of their AI systems. According to Iannopollo, this is arguably one of the main challenges of the new requirements.

“Companies must start with measuring the performance of their AI and GenAI systems from critical principles of responsible AI, such as bias, discrimination and unfairness,” she wrote in a blog post covering the Act.
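Neither the Act nor Iannopollo names a specific bias metric. One commonly used starting point is the demographic parity gap: the difference between the highest and lowest rate of positive decisions across groups. The Python sketch below computes it on made-up data; the function names, group labels and decisions are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of positive decisions (1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(groups, decisions):
    """Difference between the highest and lowest group selection rate."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())


# Made-up example: group "a" is approved 3 times out of 4, group "b" once out of 4.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
gap = demographic_parity_gap(groups, decisions)

if __name__ == "__main__":
    print(rates)  # {'a': 0.75, 'b': 0.25}
    print(gap)    # 0.5
```

A gap of zero means every group receives positive decisions at the same rate. A single number like this is a starting point for reporting rather than proof of fairness, and other metrics may suit a given use case better.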

As new standards and technical specifications emerge, the wording in parts of the Act is expected to be extended.

How does the EU differ from other regions in AI regulation?

Whatever region an organisation operates in, if it wants to deploy AI somewhere else, it needs to understand the regulatory framework both in its home country and in the other regions where the system will be used. At the time of writing, the EU has taken a risk-based approach.

However, other countries have taken different approaches to AI regulation. While jurisdictions vary in their regulatory approach, in line with different cultural norms and legislative contexts, EY points out that they are broadly aligned in recognising the need to mitigate the potential harms of AI while enabling its use for the economic and social benefit of citizens, following principles defined by the OECD and endorsed by the G20. These principles include respect for human rights, sustainability, transparency and strong risk management.

The UK government’s AI regulatory framework outline, published in February, puts forward a “pro-innovation” approach. It’s promising to see this awareness around the need for guidelines, but the non-legislative principles are focused on the key players involved in AI development.

To encourage inclusive innovation, the UK must involve as many voices as possible when shaping the regulatory landscape. This, according to Gavin Poole, CEO of Here East, means policymakers and regulators need to engage actively with not only the big tech corporations, but also startups, educational institutions and smaller businesses.

“The EU passing its ground-breaking AI Act is a wake-up call for the UK – a reminder that we must adapt and evolve our guidelines to stay ahead of the curve,” he says. “The ideal scenario is a framework that fosters growth and invests in local talent and future potential, to further cement our position as a global leader in AI innovation.”

The importance of reciprocal influence

The EU approach is very different to that of the US. This, says Ivana Bartoletti, chief privacy and AI governance officer at Wipro, is actually a good thing. “Cross-fertilisation and reciprocal influence are needed as we work out the best way to govern this powerful technology,” she says.

As Bartoletti points out, while US and EU legislation diverges somewhat, the two strategies converge on some key elements. For instance, there is a focus on fairness, safety and robustness. There is also a strong commitment and solid actions to balance innovation and the concentration of power in large tech companies.

China is also making substantial progress on AI regulation. Its approach has previously focused on specific areas of AI, rather than on building a comprehensive regulatory regime for AI systems. For example, Jamie Rowlands, a partner, and Angus Milne, an associate at Haseltine Lake Kempner, note that China has introduced individual legislation for recommendation algorithms, GenAI and deepfakes. This approach, they say, has seemingly been reactive to developments in AI, rather than proactively seeking to shape that development.

In June 2023, China confirmed it was working on an Artificial Intelligence Law. It’s not currently clear what will be covered by the new law as a draft is yet to be published, but the expectation is that it will seek to provide a comprehensive regulatory framework and therefore potentially rival the EU’s AI Act in terms of breadth and ambition.

Systems excluded from the EU AI Act

- AI developed exclusively for military purposes.

- AI used by public authorities or international organisations in third countries for law enforcement or judicial cooperation.

- AI research and development.

- People using AI for personal, non-professional activity.

What is the impact on the European AI sector?

The German AI Association has warned that, in its current form, the EU AI Act would seriously harm the European AI ecosystem and could put it at a competitive disadvantage, particularly against US and Chinese competitors.

In a paper discussing its position, it argued that general purpose AI and large language models need to be excluded from the Act, or at least from a categorical classification as high-risk use cases.

“We call for a reevaluation of the current requirements that classify AI systems as high-risk use cases, protection and promotion of European investments in large language models and multimodal models, and a clarification of vague definitions and chosen wordings,” the authors of the paper wrote.